Check out Abel Avram's Key Takeaway Points and Lessons Learned from QCon San Francisco 2011 article in which articles from this blog are quoted.

That is awesome!

This blog will be covering my attendance at the QCon 2011 conference in San Francisco, California from November 16 to 18. QCon is a software development conference on many useful topics which I will try my best to summarize through my posts. Please, feel free to post your comments and get some conversation started. Enjoy!

Monday, December 12, 2011

Friday, November 18, 2011

Final Impressions from QCon SF 2011

The past three days have been quite intense and I'm on the verge of a total information overload. All that aside, the QCon San Francisco International Software Development Conference was in my eyes a great success. My work colleague and I came here on an information seeking mission and I can now say with great confidence that we have achieved our goal. In fact, we might have collected enough data to sift through for the next couple of weeks, which we will then package in a form that is consumable in our work environment. The discussions and idea exchange will definitely continue for us but at a more local level. Some of the posts on this blog have been updated with new information as it was made available so don't be shy to re-read some of them. Also, for those who couldn't make it to QCon SF in person, don't forget to check out InfoQ.com in the coming weeks for videos of some of the presentations.

A few numbers to put things into perspective: at the time of writing this post, we have a count of over 950 page views coming mostly from North America but also, surprisingly, from South America (Argentina) and Europe (France, Germany, Austria, United Kingdom, The Netherlands, Belgium and Ireland). I'm guessing the postings on Facebook, Twitter, LinkedIn and Google+ have helped that a little :) These numbers aren't that impressive by Internet standards but they are a personal record for the little blogging that I do, #win!

On that note, we have to proceed with a quick extraction from the premises as our flight back home leaves in just a couple of hours. See you back at the Mothership in Montreal!

|

| The Mothership! |

DevOps to NoOps - cloud APIs (MuleiON)

Presentation content changed to DevOps to NoOps from Integrating SaaS applications with Mule iON

The speaker went through a discussion of the API explosion, the popular APIs, the ones he thinks we should be considering in cloud applications, and how MuleiON can be used to properly decouple an application from all the cool cloud APIs discussed and presented.

Agenda

- impact of APIs

- top 10 you should be looking at

- integrating with APIs

The impact of APIs

Programmable Web web site follows API proliferation (doubling/tripling every year!)

APIs more and more seen as revenue streams

Architecture change

The traditional 3-tier architecture (client, server, data) is being decomposed so that 3rd party applications can start using middle-tier APIs and the Data-tier changed from a typical DB into SaaS, web services, social media APIs, etc. - the data no longer lives in one place but distributed in the cloud

Technology shift

Traditional Application Environments: Application/Web-App server/DB/OS

New Application Environments: Application/PaaS (in place of Web/App server)/IaaS (in place of DB and OS, ex: Amazon EC2)

Top 10 APIs by usage

- Google Maps

- YouTube

- Flickr

- Amazon eCommerce

- Twilio - Telephony service

- eBay

- Last.fm - Radio service

- Google search

Top 10 cloud APIs - choices from speaker

- Twilio: Telephony/SMS as a service - build text/sms/voice apps really easily (excellent API, takes seconds)

- DropBox: File Sharing as a service - share files between all your devices/friends/coworkers

- PubNub: Publish/Subscribe Messaging as a service - real-time, global messaging for cloud and mobile apps (about as real-time as the web can get - low latency of about 55ms over web).

- Katasoft: Application Security as a service (now in Private BETA) - user account management, authentication and access control in your application

- AirBrake: Error Management as a service - interesting approach to error management, no need to hit the logs (can be connected to JIRA for example)

- MongoHQ: MongoDB as a service - create MongoDB in seconds

- SendGrid: email as a service - way better than normal email (like gmail) and no need to run an email server

- loggly: logging as a service - manages logs and enables easy search and navigation

- Amazon S3: file system as a service - simple API for storing both large and small files

- xeround (the cloud database): MySQL as a service - direct replacement to MySQL

see slides for all the alternatives/contenders as well.

The API challenge

Not all APIs are equal

- Hard to work with different APIs (different approaches, security schemes, data formats, interpretations of REST)

- lots of custom code in your app.

iPaaS: Do not clutter your app

Use MuleiON (integration PaaS) for loose coupling your app to this cool stuff

Your killer app --> integration layer (ex: MuleiON) --> cool stuff (Twitter, etc. all that we just listed above)

Benefits

- Configuration approach to APIs

- Handled for you (security, session management, streaming, http callbacks)

- consume and invoke multiple services, retries, error handling

Essentially, decouples and keeps your application code clean
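To make the decoupling idea a bit more concrete, here is a minimal Python sketch of what such an integration layer does conceptually. This is not MuleiON's API; the `IntegrationLayer` class, the connector function and the phone number are all made up for illustration, and the "SMS provider" is a fake that fails twice before succeeding so that no network is involved.

```python
import time
from typing import Callable, Dict


class IntegrationLayer:
    """Hypothetical stand-in for an integration layer between the app and cloud APIs."""

    def __init__(self, max_retries: int = 3, delay_seconds: float = 0.0) -> None:
        self._connectors: Dict[str, Callable[[dict], dict]] = {}
        self._max_retries = max_retries
        self._delay_seconds = delay_seconds

    def register(self, name: str, connector: Callable[[dict], dict]) -> None:
        # Each connector hides one provider's auth scheme, data format and quirks.
        self._connectors[name] = connector

    def invoke(self, name: str, payload: dict) -> dict:
        # Retries and error handling live here, not in the application code.
        last_error = None
        for _ in range(self._max_retries):
            try:
                return self._connectors[name](payload)
            except ConnectionError as error:
                last_error = error
                time.sleep(self._delay_seconds)
        raise RuntimeError(f"{name} failed after {self._max_retries} attempts") from last_error


def make_flaky_sms_connector(failures_before_success: int) -> Callable[[dict], dict]:
    """Fake SMS connector that fails a few times before succeeding (no network involved)."""
    state = {"calls": 0}

    def send_sms(payload: dict) -> dict:
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise ConnectionError("simulated transient failure")
        return {"status": "sent", "to": payload["to"], "attempts": state["calls"]}

    return send_sms


layer = IntegrationLayer(max_retries=3)
layer.register("sms", make_flaky_sms_connector(failures_before_success=2))
result = layer.invoke("sms", {"to": "+15550100", "body": "build is green"})
print(result)
```

The application only ever calls `layer.invoke("sms", ...)`; swapping the provider or changing the retry policy would not touch the application code, which is the point the speaker was making.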

DEMO

NetApp Case Study in Big Data

2 speakers, an Enterprise Architect from NetApp and Principal Architect from Think Big Analytics

Big Data is not really a problem of particular interest for CAE; however, the approach taken to tackle NetApp's Big Data problem might be. They use a lot of open source systems to tackle their issues.

Presentation slides: not published

Agenda

- NetApp big data challenge

- Technology assessment

- Drivers

AutoSupport Family

- catch issues before they become critical

- secure automated call-home service

- system monitoring and non intrusive alerting

- RMA request without customer action

- enables faster incident management

AutoSupport - Why does it matter?

see slide

Business challenges

Gateways

- Scale out and respect their SLAs

- 600K ASUPs per week

- 40% coming over the weekend

- 5% growth per week

ETL

- data parsed and loaded in 15 min.

- only 5% goes in the data warehouse, but even then, it is growing by 6-8TB per month

- Oracle DBMS struggling

- no easy access to this unstructured data

reporting

- numerous mining requests not met currently

Incoming AutoSupport Volumes and TB consumption

- Exponential flat file storage requirement

The Petri Dish

- NetApp has its own BigData challenges with AutoSupport Data

- Executive guidance was to choose the solution that solved our business problem first: independent of our own technologies

- NetApp product teams very interested in our decision making process (the in-house customer petri dish)

ASUP Next - Proof of concept (POC) strategy

- don't swallow the elephant whole

- Use technical POCs to validate solution components

- Review the POCs results and determine the end state solution

- size the implementation costs using the results of the POCs

- Make vendor decisions based on facts!

Requirements Used for POC and RFP

- Cost effective

- Highly scalable

- Adaptive

- New Analytical capabilities

Technology Assessment

Essentially, they followed the following steps to validate their solutions

- Defined POC Use cases

- Did a Solution Comparison based on their technology survey

- They prototyped

- they benchmarked

AutoSupport: Hadoop Use case in POC

With a single 10-node Hadoop cluster (on E-Series with 60 disks of 2TB), they were able to cut a 24 billion record query from a 4 week load time down to 10.5 hours, and a previously impossible 240 billion record query now runs in only 18 hours!
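To put that first number in perspective, here is the arithmetic as a quick Python snippet. The only assumption is that "4 weeks" means four full calendar weeks of wall-clock time.

```python
HOURS_PER_WEEK = 7 * 24

old_load_hours = 4 * HOURS_PER_WEEK   # 4 weeks for the 24 billion record query
new_load_hours = 10.5                 # same query on the 10 node Hadoop cluster

speedup = old_load_hours / new_load_hours
print(f"{old_load_hours} h -> {new_load_hours} h, roughly {speedup:.0f}x faster")
```

Under that assumption, the load went from 672 hours to 10.5 hours, a speedup of about 64 times.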

This is why they chose Hadoop!

Solution Architecture

multiple slides on architectures, requirements, considerations.

Physical Hadoop Architecture

see slide for all details on disks, networks, machines, RAM, etc.

All their machines run on Red Hat Enterprise Linux (RHEL) 5.6, using the ext3 filesystem

Hadoop Architecture components

- Flume: a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. Its main goal is to deliver data from applications to Hadoop’s HDFS.

- HDFS: Hadoop Distributed File System, is the primary storage system used by Hadoop applications.

- HBase: the Hadoop database to be used when you need random, realtime read/write access to your Big Data.

- MapReduce: a software framework introduced by Google in 2004 to support distributed computing on large data sets on clusters of computers.

- Pig: from Apache, it is a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating these programs.
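For readers who have never seen the MapReduce model, here is a tiny single-process Python sketch of its three phases (map, shuffle, reduce) applied to a word count. It has nothing to do with Hadoop's actual Java API, and the log lines are made up; it only illustrates the shape of the computation that Hadoop distributes across a cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    # Map: emit a (key, 1) pair for every word in one input record.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Shuffle: group all emitted values by key.
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    # Reduce: collapse all values of one key into a single result.
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


# Made-up log lines standing in for AutoSupport data.
logs = ["disk failure node1", "disk ok node2", "fan failure node1"]
print(word_count(logs))
```

On a real cluster the map and reduce calls run on different machines and the shuffle moves data over the network, but the programmer still only writes the two small functions.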

Ingestion data flows

3 types of ASUPs (AutoSUPport)

- Actionable

- Weekly logs

- Performance data

10 flume servers for 200GB/hour

less than 1 minute for case creation

Performance benchmarks

see slides

DAS vs E-Series performance benchmark

E-series multi LUN configuration changes improved read-write I/O by 65%

Hadoop Benefits

- Scalability - by decoupling computing from the storage

- Improved Performance - improved response time to meet their SLAs (faster than Oracle DBMS on unstructured data and faster than traditional server-based storage)

- Enterprise Robustness - enterprise-grade protection of HDFS metadata

Takeaways

- NetApp assessed multiple traditional DB technologies to solve its Big Data problem and determined Hadoop was the best fit.

- HW & SW short and long term costs were key in the decision to move to Hadoop on ESeries

HTML 5 Design and Development Tooling

Changed from "Rearchitecting amazon.com for the Cloud" - had enough Amazon sales speech last time...

New web motion and interaction design tool that allows designers to bring animated content to websites, using web standards like HTML 5, CSS 3, etc.

The Adobe CSS shaders proposals went via W3C (see here)

Kind of amazing how easy it was to develop animated web content applications and to deliver them to multiple devices!

Agenda

- Edge - Motion and Interaction Design Tools

- Webkit contributions - CSS Regions, CSS Shaders

- JQuery Mobile

- PhoneGap

- Flash News

- Live Demo - quite powerful tool (produces Java Script)

Webkit contributions

- allows effects on any elements of the DOM

- Live demo with Chromium (which implemented this spec) using CSS Shaders studio

- warp, fold effects kind of cool

- works directly on actual HTML content (videos, animations, selections, all still work in the animated pages) - example given with an untouched Google Maps site embedded in some animations/effects

- real cool and easy to use to add artistic elements to standard web content

- shaders not yet available - pending standard publication

JQuery mobile + PhoneGap

- can run as an app or within the browser; it creates a native shell with a web view which loads the web content or application

- used to publish to android, ios, etc.

- demo showing how to package web content from a browser to iOS by using Xcode PhoneGap extensions

- Xcode = Apple's IDE for iOS applications

- saw an iPad simulator showing how the app would look on an actual iPad

- Then an iPhone simulator was only a click away.

- generated application deployable to the App Store, for example.

- he then tested it on an actual device instead of on a simulator

- very easy to test applications for various platforms/devices

- he then tried it on an Android phone (with a produced android app output from Eclipse instead of XCode)

2 problems with what was demoed so far

- multiple IDEs/tools for multiple target platforms (Xcode for iOS, Eclipse for Android, Windows, etc.)

- debugging remote html applications when it runs on a device

For the latter problem, they developed the Web Inspector Remote tool (also called weinre), similar to web application debuggers such as the ones in Chrome, Firebug, etc.

Flash news

- Adobe painted as non HTML company... which they claim is not the case

- They think Flash and Flex are still useful in certain cases (peer-to-peer applications, for example a game with 2 players, or enterprise applications) like real-time data streaming, collaboration sessions between multiple users/devices, etc.

Conclusion

Building an ecosystem for hackers

Session previously called "DVCS for agile teams" has been cancelled and changed to "Building an ecosystem for hackers" given by a speaker from Atlassian

The software life-cycle

It goes from ideas, to prototype, to code, to shipping the product and rinse and repeat until your product is bloated and the code begins to stink!

Conventional wisdom

- Users are stupid

- Software is hard

- Supporting software sucks

- We want simple

How can we empower users

- Build opinionated software (if you do not like it, don't buy it - but you are thus building a fence around your product)

- Build a platform they can hack on (you allow your customers to get involved)

Two schools of thought

- Product #1: customers are sand-boxed in the environment

- Product #2: customers build against APIs (REST)

Who does #1 well

- Apple

- Android

- Atlassian

- Chrome/Firefox

- WordPress

- Many others - you know it is well done when companies can start living within the ecosystem

How do they do this at Atlassian

- you start with a plugin framework (they adopted an OSGi based plugin system)

- Speakeasy is a framework for creating JavaScript/CSS extensions and attaching them to Atlassian products (ex: JIRA, etc.) - it runs a little Git server inside, which lets you see extension sources, fork them, publish updates, etc.

Demo

- Using JIRA - he used a little "kick ass" extension that lets you kill bugs in the agile view.

Pimp it with REST

- Many REST APIs built in Atlassian

- They are built as extensions using the extensions framework using Jersey (Java REST library), which gives a WADL (Web Application Description Language) file.

- The provided SDK tools offer, among other things, a command line interface to automate all that is needed.

I have built my plugin, now what

They provide the Plugin Exchange platform to exchange plugins (soon, that will support selling plugins like the App Store)

Hey, we have got a community!

- Atlassian uses contests to get developers to start using the extension mechanisms they developed

- They also host code contests, once a year, to encourage people to develop new plugins (ex: JIRA Hero)

- They host conferences for developers (ex: AtlasCamp) - an online community is good, but face-to-face events are better!

- The fun element is required for the ecosystem to actually live.

In the end

People want to contribute to your product, but you need to give them the ways to do it!

Automating (almost) everything using Git, Gerrit, Hudson and Mylyn

A presentation on how good open source tools can be integrated together to push development efficiency up.

First, here are all the involved tools references

Git (Distributed Version Control System-DVCS tool)

Gerrit (Code Review tool)

Mylyn (Eclipse-based Application Lifecycle Management-ALM tool)

Why GIT?

- DVCS

- Open Source

- Airplane-friendly (all commits can be done offline since in DVCS, commits are done locally - a push is required to put it on remote servers)

- Push and Pull (inter-developer direct exchange, no need to pass via central server)

- Branching and Merging (amazing for this aspect - automatic merging was required because of the many Linux kernel contributors) - based on the principle: if it hurts, do it more often!

Why Hudkins?

- CI

- Open Source

- Large community

- Lots of plugins

Why Gerrit?

- Code reviews for GIT (line by line annotations)

- Open Source

- Lets you build a workflow in the system

- Access Control (originally created to manage Android contributions)

Why Mylyn?

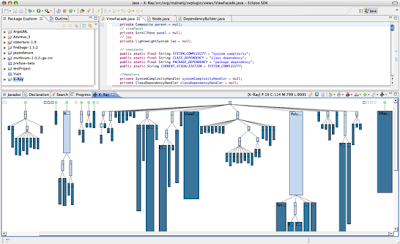

- 90% of IDE's content is irrelevant... (see image) - (lack of integration, information overload, context-loss when multi tasking or when exchanging tasks with another developer)

- Open Source

- Part of Eclipse 99% of the time

- NOTES: Other IDEs supported? Seems not. Apparently, it has performance issues (Eclipse users seem to disable Mylyn right off the bat...)

Pie chart of a developer's day

See the presentation image, but essentially, a typical developer spends 34% of the day reading/writing code, 10% testing code and the rest of the day producing nothing significant (including 22% lost to pure interruptions).

Why Mylyn part 2?

- Mylyn can track all you do within a task (what classes you changed, the web pages you accessed, etc.)

- Ex: a colleague goes on vacation; by reopening his task to complete it in his place, you get the context that follows the task and thus see the relevant classes, web pages, etc.

- Task focused interface (task system in the background - like JIRA, Bugzilla, etc.)

- Over 60 ALM tools supported

Contribution Workflow

See image from preso

Before: Patch based code reviews

- essentially a back and forth thing between contributors and integrators (code did not compile, then a test did not pass, etc.) - typically, it used to take approximately 2 days to integrate a piece of code into one of the open source projects listed above

- Limited automation

- Difficult to trace changes

- cumbersome process

- Late feedback

- Code pushed to Gerrit (which acts as a GIT repository - with code review automation)

- Code changes are then pulled by Hudson, a build is done and Hudson votes back to Gerrit based on whether all required gates passed (build OK: vote +1, tests OK: vote +1, etc.); if a step fails, data flows back into the developer's IDE mentioning, for example, that a certain test failed. The feedback is almost instantaneous.
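The voting idea is easy to picture in code. Below is a minimal Python sketch of gates that each cast a +1 or -1 vote on a change, stopping at the first failure so the feedback comes back quickly. It does not use the real Gerrit or Hudson APIs; the gate names, the change identifiers and the `verify_change` and `is_mergeable` helpers are hypothetical.

```python
from typing import Callable, List, Tuple

Gate = Tuple[str, Callable[[str], bool]]


def verify_change(change_id: str, gates: List[Gate]) -> List[Tuple[str, int]]:
    """Run each gate in order and record a +1 or -1 vote; stop at the first failure."""
    votes = []
    for name, check in gates:
        passed = check(change_id)
        votes.append((name, 1 if passed else -1))
        if not passed:
            break  # fast feedback: later gates are skipped
    return votes


def is_mergeable(votes: List[Tuple[str, int]], gates: List[Gate]) -> bool:
    # A change is mergeable only if every gate ran and voted +1.
    return len(votes) == len(gates) and all(vote == 1 for _, vote in votes)


# Hypothetical gates; a real setup would trigger an actual build and test run.
gates = [
    ("build", lambda change: True),
    ("tests", lambda change: change != "change-with-failing-test"),
]

good = verify_change("change-ok", gates)
bad = verify_change("change-with-failing-test", gates)
print(good, is_mergeable(good, gates))
print(bad, is_mergeable(bad, gates))
```

The list of votes is what would flow back to the developer: it says which gate failed without anyone having to dig through build logs.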

Demo: Collaboration

A complete demo was conducted to show the previously discussed tools integration. Very interesting even though it was Eclipse-based ( ;-) )

NOTE on Business Processes

It is possible to model business processes at Gerrit level. For example, all developers could have access rights to commit to Gerrit, but only a certain predefined user can commit to the central repository.

Awesome! How do we do it at home now?

Setting up the server (in that order)

- Install all the software and then:

- Clone GIT repository

- Initialize Gerrit

- Register and configure a Gerrit human user

- Register and configure a Build user (the automating user)

- Install Hudkins Git and Gerrit plugins

- Configure standard Hudkins build

- Configure Hudkins Gerrit build (integrate the review within the build)

Setting up the client (contributor - in that order)

- Install EGit, Mylyn Builds (Hudson), and Mylyn Reviews (Gerrit)

- Clone GIT repository (pull)

- Configure Gerrit as remote master

- There is no step 4! Done.

Setting up the client (committer - in that order)

- Configure a Mylyn builds Hudson repository

- Configure a Mylyn review Gerrit repository

- Create a task query for Gerrit

- There is no step 4! Done.

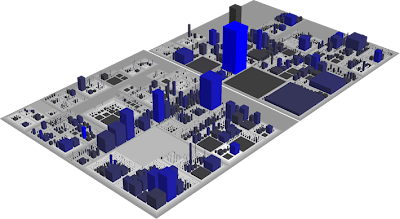

Visualizing Software Quality - Visualization Tools

This is the second part of a previous post found here. If you haven't done so yet, go read it. Now, as promised, here is a summary list of the tools which can be used to visualize various aspects of software quality. I will provide a short description for each kind of visualizations and for more detail, I strongly recommend you go visit Erik's blog at http://erik.doernenburg.com/.

For all of the following types of software quality visualization charts or graph, you must first collect and aggregate the metrics data. This can be done using static analysis tools, scripts or even Excel. What is important is defining what you want to measure, finding the best automated way to get the data (how) and aggregating the necessary measures to produce the following views. Each of the following views have different purposes however, they are all very easy to understand and give you a very simple way to see the quality of your software.

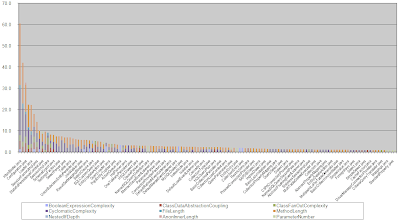

Toxicity Chart

The toxicity chart is a tool which can be used to visualize the internal quality of your software, more specifically, it can show you how truly horrible your code is. In the toxicity chart, each bar represents a class and the height of each bar is a composition of metrics showing you how toxic the class is. So, the higher the bar, the more horrible the class is. It can be an effective way to represent poor quality to less technically inclined people (e.g. management types).

An example of a metric which composes the bar could be the length of a method. To generate this measure, you must define a threshold for the specific metric which would then produce a proportional score. For example, if the line-of-code threshold for a method is 20 lines and a method contains 30 lines, its toxicity score would be 1.5 since the method is 1.5 times above the set threshold. For more details on the toxicity chart, read the following article.

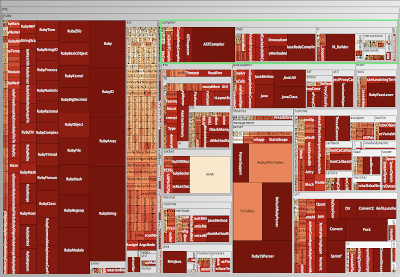

Metric Tree Maps

A metrics tree map visualizes the structure of the code by rendering the hierarchical package (namespace) structure as nested rectangles with parent packages encompassing child packages. (Excerpt taken from Erik's blog). For more details on the metric tree maps, go read the following article.

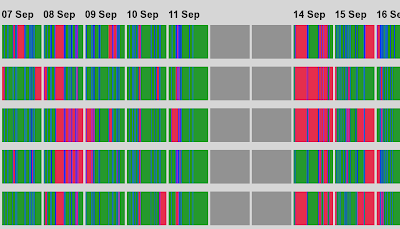

Visualizing Build Pain

The following graph shows a series of "build lines" representing the state of builds over time. Each build gets a line. This is very useful in continuous integration environments as it gives you a high level view of build related problems. Colour is used in the following way: green for good, red for broken, and blue for building. So, if you set this up on a big screen in a highly visible area of your office, people will know very quickly when many problems arise. As an added bonus, keeping the build lines from past days will give you an idea of how well the development teams have reduced the broken builds from the past. For more details on the build line graphs, go read the following article.

System Complexity View

System Complexity is a polymetric view that shows the classes of the system, organized in inheritance hierarchies (excerpt from Moose Technology). Each class is represented by a node:

CodeCity 3D View

Finally, this type of visualization has to be the most awesome way to look at your software. CodeCity is written by Richard Wettel and is an integrated environment for software analysis, in which software systems are visualized as interactive, navigable 3D cities. The classes are represented as buildings in the city, while the packages are depicted as the districts in which the buildings reside. The visible properties of the city artifacts depict a set of chosen software metrics (excerpt from Moose Technologies). For more details on this, you can also go read the following article.

For all of the following types of software quality visualization charts or graphs, you must first collect and aggregate the metrics data. This can be done using static analysis tools, scripts or even Excel. What is important is defining what you want to measure, finding the best automated way to get the data (how) and aggregating the necessary measures to produce the following views. Each of the following views has a different purpose; however, they are all very easy to understand and give you a very simple way to see the quality of your software.

Toxicity Chart

The toxicity chart is a tool which can be used to visualize the internal quality of your software, more specifically, it can show you how truly horrible your code is. In the toxicity chart, each bar represents a class and the height of each bar is a composition of metrics showing you how toxic the class is. So, the higher the bar, the more horrible the class is. It can be an effective way to represent poor quality to less technically inclined people (e.g. management types).

|

| Toxicity Chart |

An example of a metric which composes the bar could be the length of a method. To generate this measure, you must define a threshold for the specific metric which would then produce a proportional score. For example, if the line-of-code threshold for a method is 20 lines and a method contains 30 lines, its toxicity score would be 1.5 since the method is 1.5 times above the set threshold. For more details on the toxicity chart, read the following article.
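Here is that calculation as a small Python sketch. The proportional score comes straight from the example above; the rule that values within the threshold score zero, the idea of summing the scores into a bar height, and the `parameter_count` metric are my assumptions for illustration, so check Erik's article for the exact rules.

```python
from typing import Dict


def toxicity_score(value: float, threshold: float) -> float:
    # Proportional score above the threshold; values within the threshold
    # are assumed to contribute nothing.
    return value / threshold if value > threshold else 0.0


def class_toxicity(metrics: Dict[str, float], thresholds: Dict[str, float]) -> float:
    # Bar height for one class: the sum of its per-metric scores (assumption).
    return sum(toxicity_score(metrics[name], thresholds[name]) for name in thresholds)


thresholds = {"method_length": 20, "parameter_count": 6}   # parameter_count is illustrative
metrics = {"method_length": 30, "parameter_count": 4}

print(toxicity_score(30, 20))               # 1.5, the example from the text
print(class_toxicity(metrics, thresholds))  # 1.5, only method_length exceeds its threshold
```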

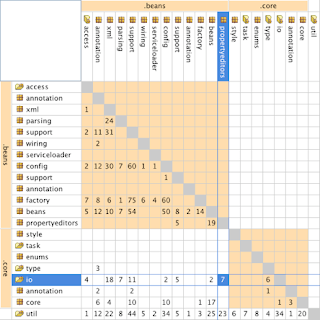

Dependency Structure Matrix

The following matrix is used to understand structure by showing dependencies between groups of classes such as packages (in Java) or namespaces (in C#). The resulting table can be used to uncover cyclic dependencies and level of coupling between these groups. For more details on the DSM, go read the following article.

|

| DSM |
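If you want to play with the idea, a DSM is just a square matrix of "who depends on whom". The following Python sketch builds one from a made-up dependency map and reports pairs of packages that depend on each other. Note that it only finds direct two-package cycles (cells mirrored across the diagonal); longer cycles would need a proper graph search, and the package names are invented.

```python
from typing import Dict, List, Set, Tuple


def build_dsm(dependencies: Dict[str, Set[str]]) -> Tuple[List[str], List[List[int]]]:
    """Return package names and a matrix where cell [i][j] is 1 if package i depends on j."""
    names = sorted(dependencies)
    matrix = [[1 if target in dependencies[source] else 0 for target in names] for source in names]
    return names, matrix


def mutual_dependencies(names: List[str], matrix: List[List[int]]) -> List[Tuple[str, str]]:
    # A direct cycle shows up as a pair of cells mirrored across the diagonal.
    size = len(names)
    return [
        (names[i], names[j])
        for i in range(size)
        for j in range(i + 1, size)
        if matrix[i][j] and matrix[j][i]
    ]


# Made-up package dependencies for illustration.
deps = {
    "app.ui": {"app.service"},
    "app.service": {"app.data", "app.ui"},   # service -> ui closes a cycle
    "app.data": set(),
}

names, matrix = build_dsm(deps)
for name, row in zip(names, matrix):
    print(f"{name:12} {row}")
print(mutual_dependencies(names, matrix))
```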

Metric Tree Maps

A metrics tree map visualizes the structure of the code by rendering the hierarchical package (namespace) structure as nested rectangles with parent packages encompassing child packages. (Excerpt taken from Erik's blog). For more details on the metric tree maps, go read the following article.

|

| Metric Tree Map Polymetric View |

Visualizing Build Pain

The following graph shows a series of "build lines" representing the state of builds over time. Each build gets a line. This is very useful in continuous integration environments as it gives you a high level view of build related problems. Colour is used in the following way: green for good, red for broken, and blue for building. So, if you set this up on a big screen in a highly visible area of your office, people will know very quickly when many problems arise. As an added bonus, keeping the build lines from past days will give you an idea of how well the development teams have reduced the broken builds from the past. For more details on the build line graphs, go read the following article.

|

| Build Lines |

System Complexity View

System Complexity is a polymetric view that shows the classes of the system, organized in inheritance hierarchies (excerpt from Moose Technology). Each class is represented by a node:

- width = the number of attributes of the class

- height = the number of methods of the class

- color = the number of lines of code of the class

|

| System Complexity Polymetric View |
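The mapping from class metrics to node appearance is simple enough to sketch in a few lines of Python. The `ClassMetrics` and `Node` types and the sample numbers are made up, and expressing colour as a shade between 0 and 1 (relative to the largest class) is my assumption; the real tool has its own colour scale.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClassMetrics:
    name: str
    attributes: int
    methods: int
    lines_of_code: int


@dataclass
class Node:
    name: str
    width: int
    height: int
    shade: float  # 0.0 = lightest, 1.0 = darkest


def to_nodes(classes: List[ClassMetrics]) -> List[Node]:
    # Normalise lines of code against the largest class so shades are comparable.
    max_loc = max((c.lines_of_code for c in classes), default=0) or 1
    return [
        Node(c.name, width=c.attributes, height=c.methods, shade=c.lines_of_code / max_loc)
        for c in classes
    ]


# Made-up metrics for two classes.
nodes = to_nodes([
    ClassMetrics("OrderService", attributes=4, methods=25, lines_of_code=800),
    ClassMetrics("Money", attributes=2, methods=6, lines_of_code=200),
])
for node in nodes:
    print(node)
```

A tall, narrow, dark node such as `OrderService` here is the kind of class that jumps out of the view: many methods, few attributes and a lot of code.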

CodeCity 3D View

Finally, this type of visualization has to be the most awesome way to look at your software. CodeCity is written by Richard Wettel and is an integrated environment for software analysis, in which software systems are visualized as interactive, navigable 3D cities. The classes are represented as buildings in the city, while the packages are depicted as the districts in which the buildings reside. The visible properties of the city artifacts depict a set of chosen software metrics (excerpt from Moose Technologies). For more details on this, you can also go read the following article.

|

| CodeCity Polymetric View |

Lesson Learned from Netflix in the Global Cloud [UPDATED]

Simply put, if you want to do Cloud, don't try to build one; find the best possible infrastructure web services platform in the cloud out there and use it! I'll try to give you a glimpse of what Adrian Cockcroft from Netflix presented this morning but omit the technical details, as I will post the presentation link later in an updated version of this post.

If you don't know about Netflix, you might have been living under a rock for the past couple of years but just in case, Netflix provides services for watching TV and movies online to over 20 million users globally across multiple platforms, most of which can instantly stream the content over the Internet. When Netflix decided to move its operation to the cloud, it decided to use a public Cloud. Why use the public Cloud you ask? Well... Netflix just couldn't build data centres fast enough and decided to search for a robust and scalable Cloud offering to develop its platform on. The choice was set on Amazon Web Services (AWS). Partnering with AWS has allowed Netflix to be ~100% Cloud based! Some smaller back end bits have still not moved to the Cloud; however, they are aggressively pushing to change that. The simple rationale behind it all was that AWS has dedicated infrastructure support teams which Netflix did not, so the move was a no-brainer.

|

| Netflix in the Cloud on AWS |

So, is your company thinking of being in the Cloud or has it started trying to build? Only time will tell which path will #win; however, know that if you want to make the move now, AWS is readily available to anyone. A lot of the content from this presentation goes into the technical details of their platform architecture on AWS and I invite you to come back to this post later today for a direct link to the presentation as it becomes available. [UPDATED: Slides Link]

In the meantime, check out The Netflix "Tech" Blog for some discussion on the subject.

Dart, Structured Web Programming by Google

Gilad Bracha, a software engineer from Google, is presenting an overview of Dart, a new programming language for structured web programming. Code example links are posted at the end.

On Dart performance, when translated to JavaScript, it should be at parity with handwritten JavaScript code. On the VM, it should be substantially better.

In conclusion, Dart is not done yet. Every feature of every programming language out there has been proposed on the Dart mailing list, however, Google has not taken any decision on these yet. By all means, go check out the preview, try it out and participate!

Some code examples

You can learn more about Dart at http://www.dartlang.org/

|

| Gilad Bracha on Dart |

Dart is a technology preview, so it is open to change and will definitely change. On today's Web, developing large-scale applications is hard. It is hard to find the program structure, there is a lack of static types, no support for libraries, tool support is weak and startup performance is bad. Innovation is essential. Dart tries to fill a vacuum; the competition is NOT JavaScript… but fragmented mobile platforms are. So, what is Dart?

- A simple and unsurprising OO programming language

- Class-based single inheritance with interfaces

- Has optional static types

- Has real lexical scoping

- It is single-threaded

- Has a familiar syntax

You can try Dart online at: http://try.dartlang.org. Dart has a different type checker. In other programming languages, a conventional type-checker is a lobotomized theorem prover, i.e. it tries to prove the program obeys a type system. If it can't construct a proof, the program is considered invalid: "Guilty until proven innocent". In Dart, you are "Innocent until proven guilty".

In Dart, types are optional (more on optional types). During development, one can choose to validate types but by default, type annotations have no effect and no cost… the code runs free!
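The "annotations have no effect unless you ask for checking" idea can be sketched outside Dart. What follows is a Python analogy, not Dart code, and it does not reproduce Dart's actual checked mode; `checked`, `area` and `checked_area` are hypothetical names made up for the illustration. Python annotations are also ignored when the code runs, and an opt-in decorator stands in for choosing to validate types during development.

```python
import inspect
from functools import wraps


def checked(func):
    """Opt-in validation: compare each argument against its annotation."""
    signature = inspect.signature(func)

    @wraps(func)
    def wrapper(*args, **kwargs):
        bound = signature.bind(*args, **kwargs)
        for name, value in bound.arguments.items():
            expected = signature.parameters[name].annotation
            if expected is inspect.Parameter.empty:
                continue  # no annotation, nothing to validate
            # Only plain class annotations (int, str, ...) are validated here.
            if isinstance(expected, type) and not isinstance(value, expected):
                raise TypeError(
                    f"{name} should be {expected.__name__}, "
                    f"got {type(value).__name__}"
                )
        return func(*args, **kwargs)

    return wrapper


def area(width: int, height: int) -> int:
    # The annotations are documentation only: nothing is checked at call time.
    return width * height


checked_area = checked(area)

print(area(2, 3))       # annotations respected
print(area("ab", 3))    # annotations ignored, the code still runs
try:
    checked_area("ab", 3)
except TypeError as error:
    print("checked:", error)
```

The unchecked call with a string still runs (it repeats the string), which is the "innocent until proven guilty" behaviour; only the wrapped version complains.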

There are no threads in Dart. The world has suffered enough with threads. Dart has isolates. They are actor-like units of concurrency and security. Each isolate is conceptually a process, nothing is shared and all communication takes place via message passing. Isolates support concurrent execution.
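To picture the "nothing is shared, everything is a message" model, here is a toy sketch in Python. It is not Dart's isolate API, and it delivers messages one after another in a single loop, so it shows the isolation and message passing but not real concurrent execution. `Runtime`, `Isolate`, `counter` and `collector` are hypothetical names.

```python
import copy
from collections import deque


class Isolate:
    """Actor-like unit: a handler, a mailbox and private state."""

    def __init__(self, handler):
        self.handler = handler
        self.mailbox = deque()
        self.state = {}  # never handed to another isolate


class Runtime:
    def __init__(self):
        self.isolates = {}

    def spawn(self, name, handler):
        self.isolates[name] = Isolate(handler)

    def send(self, name, message):
        # Deep-copy so sender and receiver never share a mutable object.
        self.isolates[name].mailbox.append(copy.deepcopy(message))

    def run(self):
        # Deliver messages one at a time until every mailbox is empty.
        progressed = True
        while progressed:
            progressed = False
            for isolate in list(self.isolates.values()):
                if isolate.mailbox:
                    message = isolate.mailbox.popleft()
                    isolate.handler(self, isolate.state, message)
                    progressed = True


def counter(runtime, state, message):
    state["total"] = state.get("total", 0) + message["amount"]
    runtime.send(message["reply_to"], {"total": state["total"]})


def collector(runtime, state, message):
    state.setdefault("seen", []).append(message["total"])


runtime = Runtime()
runtime.spawn("counter", counter)
runtime.spawn("collector", collector)

payload = {"amount": 2, "reply_to": "collector"}
runtime.send("counter", payload)
payload["amount"] = 40  # changing it after sending does not touch the copy
runtime.send("counter", payload)
runtime.run()

print(runtime.isolates["collector"].state["seen"])  # [2, 42]
```

The only way `collector` learns anything about `counter` is through the messages it receives, which is the property the talk was stressing.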

|

| Dart Execution |

On Dart performance, when translated to JavaScript, it should be at parity with handwritten JavaScript code. On the VM, it should be substantially better.

In conclusion, Dart is not done yet. Every feature of every programming language out there has been proposed on the Dart mailing list; however, Google has not made any decisions on these yet. By all means, go check out the preview, try it out and participate!

Some code examples

- Hello World - http://try.dartlang.org/s/bk8g

- Generics - http://try.dartlang.org/s/dyQh

- Isolates - http://try.dartlang.org/s/cckM

You can learn more about Dart at http://www.dartlang.org/

Friday's Plan

Here is today's plan following the keynote. Don't hesitate to give your feedback or start a discussion in the comments.

Myself:

- Netflix in the Global Cloud - link

- Realtime Web Apps with HTML5 WebSocket and Beyond - link

- NoSQL Applications Panel - link

- On track for the cloud: Building a hybrid cloud at Canadian Pacific - link

- The Architecture of Oracle's Public Cloud - link

Steve 2.0:

- Automating (almost) everything using Git, Gerrit, Hudson and Mylyn - link

- DVCS for agile teams - link - cancelled and replaced with Building an ecosystem for hackers - link

- Rearchitecting amazon.com for the Cloud - link - changed to HTML 5 Design and Development Tooling - link

- NetApp Case Study - link

- Integrating SaaS applications with Mule iON - link

Thursday, November 17, 2011

Visualizing Software Quality - Metrics & Views

A recurring theme in presentations at QCon SF 2011 is the use of visualization systems. This morning, I attended a presentation entitled Software Quality – You Know It When You See It. It was presented by Erik Doernenburg from ThoughtWorks and will most definitely appeal to all the software QA buffs out there. This topic has a lot of content so I will spread my notes across multiple posts. Erik first posed the following question:

"How can you see software quality or non-quality?"

Well, as it turns out, there are many ways this can be done. Simply put, the steps are fairly straightforward:

- Collect metrics

- Aggregate data

- Render graphics

In this post series, I will cover all three of these steps by presenting examples of available tools used to visualize software quality. However, before we can do that, we must observe the software and first collect a few metrics.

Types of Metrics

What types of metrics exist which can be used to measure software quality? We have lines of code (LoC), method length, class size, cyclomatic complexity, weighted methods per class, coupling between objects or classes, amount of duplication, check-in count, test coverage, testability and test-to-code ratio, to name only a few. Data collection can be mostly automated and tools exist out there to do this. For example, research tools such as iPlasma (see reference paper) provide such capability for extracting a range of different software metrics. This is the first step in producing a visual representation, but before going into more detail on visualization tools, let's take a look at some viewpoint metaphors on software quality which present the problem of "what" should be observed.
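To give an idea of what automated collection can look like, here is a minimal sketch that extracts two of the metrics above (method length and an approximation of cyclomatic complexity) from Python source using the standard `ast` module. It is not how iPlasma works; `collect_metrics`, `BRANCH_NODES` and `SAMPLE` are hypothetical names, and the complexity figure is a rough count of branching constructs plus one, not a full implementation of the metric.

```python
import ast

# Constructs counted as one extra path each (a simplification).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                ast.BoolOp, ast.IfExp)


def collect_metrics(source):
    """Return {function name: {"length": ..., "complexity": ...}}."""
    tree = ast.parse(source)
    metrics = {}
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Nested functions are also counted in their enclosing function.
            branches = sum(isinstance(child, BRANCH_NODES)
                           for child in ast.walk(node))
            metrics[node.name] = {
                "length": node.end_lineno - node.lineno + 1,
                "complexity": 1 + branches,
            }
    return metrics


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "other"


def identity(x):
    return x
'''

for name, values in collect_metrics(SAMPLE).items():
    print(name, values)
```

The output of this step is just a table of numbers per function; the aggregation and rendering steps are what turn it into something you can see.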

The 30000 ft Level View

At this height (or at any arbitrarily greater height) we have a view on software that is very macro. We see component diagrams, high-level architecture, compositions, deployment diagrams, etc. This is not very useful from a quality perspective because we don't have enough detail to properly measure the quality of the software being observed; a lot of abstraction layers exist between these high-level views and the actual system. Also, it is very common that what the architect dreamt up and the actual production code are two very separate beasts.

The Ground Level View

At this level, one can spot and address many quality issues at the line-of-code level. This might seem relevant at first, but consider that this level might be a little too micro to be efficient. Visualizing quality at the line-of-code level can easily overwhelm us with too much detail.

The 10000 ft Level View

Think of this view as being just at the "right level". At 10000 feet, there is not too much detail and the observable components have enough detail, which makes them prime for quality visualization.

to be continued in another post...

Mike Lee on Product Engineering

This is a follow-up post on last night's keynote. If you have about 47 minutes to spare, I highly recommend you have a look at the following presentation (link) on InfoQ given by Mike Lee at the Strange Loop conference on November 10, 2011. We had the encore at QCon SF last night. Enjoy!

Things I Wish I'd Known

Rod Johnson (background info) of SpringSource discusses some things he wishes he'd known when he ventured onto the business (dark) side of things with his startup.

|

| Rod Johnson |

Most software developers are quite good with technology; for this reason, Rod's keynote focused more on business than technology. In business, especially in startups, there are some intense highs (e.g. financial success, changing the world) but equally painful lows (e.g. layoffs, not taking a salary, years of obsession).

So, if you're planning to do a start-up, you need to ask yourself: where is the opportunity? Remember that technology must come first! There must be an opportunity for disruption. Where is there a market gap? Did everyone else fail to see something? Did someone else screw up?

Sometimes, the questions are complicated and the answers are simple. Try to prove yourself wrong. Be your toughest critic. Why is there a business here? Is it less painful to abandon a thought experiment than a business? There will always be a better idea.

Do NOT start writing code... it's addictive if you're any good at it.

Once you've validated your business idea, believe it! Vision must matter to you at an emotional level. Align your team on the vision. Unless someone like you cares a whole awful lot, nothing is going to get better. Just think of Steve Jobs. Are you prepared for success or failure? Success means years of obsession, which has a big impact on family, friends and hobbies. Your lifestyle can be reduced compared to a normal job. And what about failure? There is a range of outcomes: at best, an improved personal brand; at worst, significant financial impact and wasted years.

Being an entrepreneur means a lot of risk. Many successful entrepreneurs have had repeated failures but have bounced back. You can and will get things wrong. Some you can get away with for a while, since you can't get everything right the first time. So long as you are ready to change, this will help you. Some things, however, you cannot get wrong, such as the legal aspects of a business.

A great team makes a great company; this is what investors are looking for. Building a team is about complementary skills. First, you need to understand yourself and know what things you are good at and what things you suck at. Assemble individuals who possess the required skills and agree on your vision and ambition. Remember that you cannot afford to have different goals. In essence:

"We’re all a little weird. And life is weird. And when we find someone whose weirdness is compatible with ours, we join up with them and fall into mutually satisfying weirdness—and call it love—true love." - Dr. Seuss

On investment, if you're starting a software company, you probably need more money than you think. A great investor will lift you to another level. A poor investor won't help and will exploit you. You will be married to your investors, so choose wisely. Not all investors are equal. A good trick to pick out good investors is due diligence. Find out a bit more about the person you want to do business with before signing the contract.

Finally, a few mistakes you shouldn't make:

- Don't piss people off, it will come back to bite you

- Don't let customers drive your product roadmap

Cloud-Powered Continuous Integration and Deployment

The speaker comes from Amazon and wants to give us advice on Continuous Integration (CI) and Continuous Delivery (CD) in the cloud. In the end, I only attended the first half of his presentation, which covered basic CI material. Just when it could have become interesting, the speaker became really Amazon EC2 centric... not much to retain from this session, except maybe the poka-yoke technique (see below).

At Amazon, the Customer is the center of their universe

The old way was to flow from Requirements to Development, Check-in to Testing and QA to Release. Most of the learning from customers happens at the end, in the Release phase, a little in the Requirements phase and pretty much none in the middle phases. So, to learn faster, the goal is to make the inner steps smaller and apply CI, CD and Continuous Optimization.

CI

- Goal: to have a working state of the code at any point in time

- Benefit: fix bugs earlier when they are cheaper to fix

- Metric: a new guy can check out and compile on his first day on the job

Poka-yoke technique

See here: A poka-yoke is any mechanism in a lean manufacturing process that helps an equipment operator avoid (yokeru) mistakes (poka). Its purpose is to eliminate product defects by preventing, correcting, or drawing attention to human errors as they occur.

Ex: ATMs with card swipers designed so you keep your card in hand, as opposed to those that "eat" your card and give you a chance to forget it when you leave the ATM.

Basic lessons

- Keep absolutely everything in version control (scripts, etc.)

- Commit early, commit often

- Always check in to trunk, avoid branching

- Take responsibility for check-ins breaking the build

- Automate the build, test, deploy process

- Be prepared to stop the mainline when breaking occurs

- Only one way to deploy and everybody uses the same way

- Be prepared to revert to previous versions
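A couple of these lessons (automate the build, test, deploy process; stop the mainline when something breaks) can be sketched in a few lines. This is only an illustration, not what Amazon or any CI server actually runs: `run_pipeline` and `STAGES` are hypothetical names, and the stage commands are placeholders that just launch tiny Python snippets.

```python
import subprocess
import sys


def run_pipeline(stages):
    """Run (name, command) stages in order and stop at the first failure."""
    results = []
    for name, command in stages:
        completed = subprocess.run(command, capture_output=True, text=True)
        passed = completed.returncode == 0
        results.append((name, passed))
        if not passed:
            break  # stop the line: later stages never run on a broken build
    return results


# Placeholder commands standing in for real build, test and deploy scripts.
STAGES = [
    ("build", [sys.executable, "-c", "print('compiled')"]),
    ("test", [sys.executable, "-c", "raise SystemExit(1)"]),
    ("deploy", [sys.executable, "-c", "print('deployed')"]),
]

print(run_pipeline(STAGES))  # [('build', True), ('test', False)]
```

Because every stage is a command kept in one list, the same script is the only way to deploy and it can live in version control with everything else.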

Cloud

VCS systems in the cloud, CI servers in the cloud, distributed build in the cloud

They use Jenkins, TeamCity, CruiseControl, Bamboo

Uptime in High Volume Systems - Lessons Learned

- Hosting for mobile services

- Unified API for services across platforms

- Content delivery at all scale

- SLAs for throughput, latency

- Apple, Android, RIM

- Specifically Lean startup

- From wikipedia: Use of FOSS and employment of Agile Techniques; is a "ferocious customer-centric rapid iteration company"

- Attention to Continuous Improvement

- Value the elimination of waste

- Transparent, open processes

- Does not apply to just Engineering but also to Operations

- more than 20K developers

- 300 million active application installs use our APIs across more than 170 million unique devices

- 10s of billions of API requests per month

- 10 million direct socket connections to our servers

- more than 50TB worth of analytic data

- 30 software engineers, 5 operations engineers

3-tier architecture (using Apache Cassandra, PostgreSQL, Java, Python, HDFS and HBase, of which they are a big user, like Facebook)

Architecture - General principles

Architecture - Services

Trending towards a service based architecture

Critical traits of a service

Architecture - fault domains

Essentially, they worked hard to ensure that when 2 resources are completely unrelated, they are in isolated fault domains if at all possible.

Engineering for iteration

Engineering for automation

3 levels of testing (Unit, Functional (with mocks) and Integration). Commits are done to a single main git branch

Engineering for simplicity

They simplified metrics and stats capture and so they can do it everywhere!

Engineering for Responsiveness

Engineering for Availability

Engineering for Continuous Improvement

They use the 5 whys approach

Operations for Transparency

They measure absolutely everything, and monitor only the important things

- Keep everyone moving in the same direction

- Help discrete teams understand how they interact

- Think in terms of small discrete services

- Continuous capacity planning based on real data

- Avoid local optimization decision making

- Minimal exposed functionality (Smallest reasonable surface area to the API and Operate on one type of data and do it well)

- Simple to operate

- Over exposure of metrics and stats

- Discoverable via ZooKeeper (future)

- Zero visibility into inner workings of other services

- No shared storage mechanisms across services (services are completely fronting their datasets) - Motivation for this: security, performance, scalability

- Minimize shared state - use ZooKeeper if absolutely necessary

- Consistent logging and configuration properties

- Consistent implementation idioms

- Consistent message passing

- Convention for on-disk layout and structure (directory structure is standard on all their nodes)

Architecture waste reduction

- All back-end services are in Java and Python

- All Java services are made to use a single set of operational scripts

- Always looking for new ways to eliminate waste

- Architectural waste comes in many forms: lots of data storage engines (PostgreSQL, MongoDB, Cassandra, HBase), a complex, unfamiliar queuing system, a large diversity in approaches for managing services, worker processes, process management, etc.

- Developer silos - avoid the bus factor - they always have at least 2-3 persons per service (and they currently have 35-40 services)

Engineering at UA

- 46% of the time they develop new features

- 28% spent sustaining internal support

- 21% production support

- 2% - social stuff (beer, ping-pong)

- Team of about 30 engineers

- Small teams organized around functional area (DB guys, etc.)

- Shortest iterations possible - Lean MVP concept (Minimum Viable Product)

- No formal QA team (!) - and they seem to be happy with giving this responsibility to all their people.

- Frequently pairing, but not mandatory - this is the choice of the developers themselves

- They always leave code better than how they found it

- All bug fixes require a code review on a review board

- Large new developments require a sit-down code/design review (a little more formal, but not that much)

They capture latency for Service critical operations and External service invocation

They capture counters for Service critical operations and Services faults
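Capturing latency and counters around critical operations can be as light as a decorator. The sketch below is my own illustration, not Urban Airship's code: `measured`, `counters`, `latencies` and `send_push` are hypothetical names, and the metrics are kept in plain in-process dictionaries rather than shipped to a real stats system.

```python
import time
from collections import defaultdict
from functools import wraps

counters = defaultdict(int)     # "<operation>.calls" / "<operation>.faults"
latencies = defaultdict(list)   # operation name -> durations in seconds


def measured(operation):
    """Record a call counter, a fault counter and the latency of a function."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                counters[operation + ".faults"] += 1
                raise
            finally:
                counters[operation + ".calls"] += 1
                latencies[operation].append(time.perf_counter() - start)
        return wrapper
    return decorator


@measured("push.send")
def send_push(device_id):
    if not device_id:
        raise ValueError("missing device id")
    return f"sent to {device_id}"


send_push("abc")
try:
    send_push("")
except ValueError:
    pass

print(dict(counters))
print(len(latencies["push.send"]), "latency samples")
```

Keeping the capture this cheap is what makes it realistic to do it everywhere, and then only monitor the handful of numbers that matter.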

Engineering for Operations

- Tests and Deployment scripts are done within the development teams (their definition of done essentially includes the deployment automation).

- Services deployments done via automation tools and

- Automation scripts always pull from prod git branch after passing auto and manual tests

- They apply the "Put the mechanics on the helicopter" principles

- Low latency, high throughput message paths using an in-house developed RPC system based on Netty and Google PBs.

- They support sync and async clients, journaling of messages

- Latency tolerant message paths using Kafka for pub-sub messaging (they generally favor pub-sub model)

- They use dark launch (like Facebook) which essentially is a roll out of a new functionality to a subset of customers

- Take a new service in or out of prod with no customer impact (double writes, single reads, migration, cutover, load-balanced HTTP with blended traffic to new and old service)

- Their service abstraction helps immensely

- Requires extra discipline for co-existing versions or services
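One common way to roll a new functionality out to a subset of customers is to hash the customer identifier into a stable bucket. The speaker did not say how Urban Airship selects its subset, so take this purely as an assumption-laden sketch; `in_dark_launch` and the feature name are hypothetical.

```python
import hashlib


def in_dark_launch(customer_id, feature, percent):
    """Deterministically decide whether a customer sees a feature."""
    digest = hashlib.sha256(f"{feature}:{customer_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable value in 0..99
    return bucket < percent


customers = [f"customer-{i}" for i in range(10_000)]
exposed = [c for c in customers if in_dark_launch(c, "new-push-api", 10)]
print(f"{len(exposed)} of {len(customers)} customers see the new service")
```

Since the bucket depends only on the feature and the customer, a customer who is in at 10% is still in when the rollout grows to 50%, and setting the percentage to 0 takes the new service out again with no customer-visible switch.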

Operations at UA

A team of 5 operations engineers handling more than 100 servers (Mostly bare metal, using EC2 for surge capacity)

See the slides here: TBD

Retrospectives, Who Needs Them, Anyway?

Book references:

Essentially it is a chance to reflect and learn (historically called postmortem made to learn from failures)

Norman Kerth's (author of Project Retrospectives: A Handbook for Team Reviews) Prime Directive

"Regardless of what we discover, we must understand and truly believe that everyone did the best job he or she could, given what was known at the time, his/her skills and abilities, the resources available, and the situation at hand."

What happens during retros - the 5 steps

- Set the stage - getting ready (ex: create safety, I'm too busy)

- Gather data - the past (ex: artifacts contest, Timeline)

- Generate insights - now (ex: fish-bone, 5 whys, patterns and shifts)

- Decide what to do - the future (ex: Making the magic happen, SMART goals)

- Closing the retro - retro summary (ex: what helped, what hindered, delta)

Stories from the trenches

- We should never impose retros on people not wanting retros... it never works!

- There is a difference between retros and discussions at the cafeteria: for retros, you have (or need!) shared commitment to find solutions.

- Serves to celebrate good things as well.

- Serves as points to evaluate where we are going

Esther Derby's (Agile Retrospectives author) findings

retros can be :

- boring

- painful (ex: finger-pointing) to the point people want to stop them

those 2 issues come from a lack of :

- focus

- participation (thus the need to set the stage properly)

- genuine insight (you can't only do start/stop/cont because they only show symptoms... what is the root cause behind the symptoms)

- buy-in (takes a genuine facilitator - never the team leader - has to be neutral to the team, ideally from another team)

- follow-through (follow-up is key, otherwise this is a waste of time)

More stories

- 30 minutes is the shortest retro possible (for a team that knows exactly what to do) - typically, a minimum of an hour.

- Finger pointing is a retrospective killer... a key to getting out of this pattern is to change the subject

- Ground rules are really useful to put constraints on how retros are conducted (no naming no blaming rule for example - interpersonal issues shall not be addressed in retros but by management in parallel)

No naming, no blaming!

People, like kids, respond much better to positive comments than to negative ones. Negative comments lead to bad teamwork and eventually bad results! See the Prime Directive again and how it actually helps with this essential ground rule.

Identify changes

It is not enough to reflect; you need to take action to change things. Use SMART goals and name someone responsible for following up on each goal:

Specific

Measurable

Attainable

Relevant

Timely

You start the next retros by asking how we are doing with regards to previously set SMART goals.

Don't "sell" retros, instead

sell a way of learning how to:

- avoid repeated mistakes

- identify and share success

Take away:

If you leave out a step in retrospectives, you will make problems emerge that you do not understand fully nor find solutions to.

And It All Went Horribly Wrong: Debugging Production Systems

Intro: Maurice Wilkes quote:

"As soon as we started programming, we found out to our surprise that it wasn't as easy to get programs right as we had thought. Debugging had to be discovered. I can remember the exact instant when I realized that a large part of my life from then on was going to be spent in finding mistakes in my own programs."

Debugging through the ages :

Production systems are more complicated (abstraction, componentization, etc.) and less debuggable! When something goes wrong, it is all opaque and more and more difficult to fix...

How have we made it this far?

- we architect ourselves to survive component failure

- we forced ourselves to stateless tiers

- when there are states, we considered semantics (ACID, BASE) to increase availability

- redundant systems

- clouds (especially unreliable ones, like Amazon) have expanded the architectural imperative to survive data-center failure

Do we still need to care about failure?

Single component failures still have significant costs (both economic and run-time)... but most dangerously, a single component failure puts the global system in a more vulnerable mode where further failures are more likely to happen... This is a cascading failure, and this is what induces failures in mature and reliable systems.

Cascaded failure example 1:

An example of a bridge that collapsed in Tampa Bay: a boat with full ballasts (which was not supposed to happen) hit the bridge (which was not supposed to happen either), and the bridge had been built by a crooked contractor playing with the sand/cement ratio in the concrete to save money (which was not supposed to happen either - after all, this is Florida, not Quebec!). In the end, it took all of those "unlikely" events occurring together to cause the bridge to fall.

Wait, it gets worse

- this assumes that the failure is fail stop

- if failure is transient a single component failure can alone induce system failure

- monitoring attempts to get at this by establishing liveness criteria for the system - and allowing operator to turn transient failure into fatal failure...

- ... but if monitoring becomes too sophisticated or invasive, it risks becoming so complicated as to compound failure.

Cascaded failure example 2:

An image of 737 rudder PCU schematic (details here). Another example of a cascaded failure that led to B737 landing issues.

Debugging in the modern era

- Failure, even of a single component, erodes overall system reliability

- When a single component fails, we need to understand why and fix it

Debugging fatal component failure

- when a component fails fatally, its state is static and invalid

- by saving the state (in DRAM, for example) to stable storage, the component can be debugged postmortem

- one starts with the invalid states and proceeds backward to find the transition from a valid state to an invalid one

- this technique is old: core dumps
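The "save the state first, debug later" idea can be imitated at a much smaller scale than a real core dump. The Python sketch below is only an analogy: it writes the failing call stack and the local variables of each frame to a file, then reads the file back as a separate step. `dump_state`, `apply_discount` and the dump file name are hypothetical.

```python
import json
import os
import tempfile
import traceback


def dump_state(error, path):
    """Write the failing frames and their local variables to stable storage."""
    frames = []
    for frame, lineno in traceback.walk_tb(error.__traceback__):
        frames.append({
            "function": frame.f_code.co_name,
            "line": lineno,
            "locals": {name: repr(value)
                       for name, value in frame.f_locals.items()},
        })
    with open(path, "w") as handle:
        json.dump({"error": repr(error), "frames": frames}, handle, indent=2)


def apply_discount(price, percent):
    factor = 100 / (100 - percent)  # invalid state when percent == 100
    return price / factor


def main(dump_path):
    try:
        apply_discount(80, 100)
    except ZeroDivisionError as error:
        dump_state(error, dump_path)  # save first, debug later


dump_path = os.path.join(tempfile.gettempdir(), "postmortem_dump.json")
main(dump_path)

# Later, away from the production system: start from the invalid state.
with open(dump_path) as handle:
    dump = json.load(handle)
last = dump["frames"][-1]
print(last["function"], last["locals"])
```

Reading the dump starts at the innermost frame, where the state is invalid, and works outward toward the caller, which is the backward walk described in the list above.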

Postmortem advantages

- no run-time systems overhead

- debugging can occur anytime, in parallel with the production system

- tooling can be very rich since the overhead caused to a run-time system is not a problem

Cascaded failure example 3:

Flight data recorder from the Air France crash (Flight 447), found almost two years after the crash

This recovery definitely permitted postmortem analysis

Postmortem challenges

- need the mechanism for saving state on failure (ex: core dumps)

- must record sufficient state (program text + program data)

- need sufficient state present in DRAM to allow for debugging

- must manage state such that storage is not overrun by a repeatedly pathological system

these challenges are real but surmountable - as in some open source systems presented below (MDB, node.js, DTrace, etc.)

Postmortem debugging: MDB

- a debugger in illumos OS (Solaris derivative)

- extensible with custom debugger modules

- well advanced for native code but much less so for dynamic environments such as Java, Python, Ruby, JS, Erlang...

- if components going into infrastructures are developed using those languages it is critical that they support postmortem debugging.

Postmortem debugging: node.js

- not really interesting for non-JavaScript developers...

- debugging a dynamic environment requires a high degree of VM specificity in the debugger...

- see all details on dtrace.org/.../nodejs-v8-postmortem-debugging

Debugging transient component failure

- a fatal failure, despite its violence, can be root-caused from a single failure

- a non-fatal failure is more difficult to compensate for and debug

Reliability Engineering Matters, Except When It Doesn't

A presentation on Reliability Engineering (RE) and how it can be used for software systems. Quite a confusing presentation doing a full circle from

- RE is good for many domains including software to

- RE is very complex for software systems to

- But it is still a tool that you can use, just not an absolute one...

Presentation content:

This book written by the presenter was introduced by the host as an excellent book on the subject: "Release It!" by Michael T. Nygard

Presentation slides here.

Context and example

Reliability Engineering (RE) is a lot about maths, so the speaker tried to present concrete examples. What if the hotel bar lighting rack falls on happy drinkers (ex: Pascal)? We could analyse the supporting chain and everything else using statics and mechanics principles. This is fine, but it abstracts away other possibilities affecting reliability such as earthquakes, the beam wearing out, drunk people occasionally hanging on it, etc. Or we can analyse this from another perspective: the rack held properly yesterday, today is a lot like yesterday, so the rack will be OK again today... but the point is that it will for sure eventually break, and this is what RE is about.

RE Maths

The presenter went through many mathematical models with hazard equations, fault density, etc. Those came from the many disciplines from which software RE draws its inspiration, and in which the probability of failure increases with time; but software does not really wear out... so we cannot simply apply those models blindly.

By taking a single server example and then a multiple servers example, he presented the Reliability Graphs (http://en.wikipedia.org/wiki/Reliability_block_diagram) where there is a start node and an end node and everything in between are successful reliability paths. A single path is subject to a global system failure if any subsystem fails.
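The arithmetic behind such a reliability block diagram is short enough to sketch. This is the textbook formula for series and parallel blocks, not the speaker's slides, and it leans on the assumption that units fail independently, which is exactly the assumption the talk warns about for software. `series`, `parallel` and the 0.99 figures are made up for the illustration.

```python
from math import prod


def series(reliabilities):
    """Every unit on the path must work: multiply the reliabilities."""
    return prod(reliabilities)


def parallel(reliabilities):
    """Redundant units: the block fails only if every unit fails."""
    return 1 - prod(1 - r for r in reliabilities)


# One path: web server -> app server -> database, each 99% reliable.
single_path = series([0.99, 0.99, 0.99])

# Two such paths side by side, assumed (optimistically) independent.
redundant = parallel([single_path, single_path])

print(f"single path: {single_path:.4f}")
print(f"two redundant paths: {redundant:.4f}")
```

A single path ends up less reliable than any of its units, while the redundant pair looks far better on paper; how much of that gain survives depends on how independent the two paths really are.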

3 Types of failures

- Independent failure: Failure of one unit does not make another unit more likely to fail (excellent! but is that true for software systems? not likely)

- Correlated failure: Failure of one unit makes another unit more likely to fail.

- Common mode failure: Something else, external to the measured system, makes 2 redundant units likely to fail (ex: redundant LEDs in an Apollo capsule that were both subject to overheating)

Important Note for RE when applied to software systems:

Lots of software reliability analyses make the error of assuming perfect independence between duplicated resources. The last 2 types of failures are way more common.

Blabla you should probably skip unless you know Andrey Markov (the mathematician)...

The speaker then went through more real-life examples, mainly to lead us to the various pitfalls of formal analysis with regard to software reliability. He showed that, because of load balancing algorithms for example, redundant systems are not independent: a failure on one certainly increases the probability of failure on the others (which receive the load of the failed ones in this example). Also, if you run a system with 9 servers, it is certainly because you need most of them to be alive for the system to work (otherwise, you overbuilt your system...), so he introduced the concept of the minimum number of systems required to be alive out of the total number of systems deployed. Interestingly, one of the pitfalls in increasing reliability seems to be that failover mechanisms tend to bring with them their own failure paths...

In the end, when he took quite a small system as an example (maybe 5 machines) and put the "invisible" systems from the logical system block diagram (ex: switches, routers, hard drives, etc.) into the probability of failure equation, the result was nothing less than spectacular (I can't write this equation down!). It certainly proved again that true independence most often does not exist in software systems.

Also, unlike in the famous construction analogies, a failed software system can come back up. To handle that aspect, he introduced the work of the mathematician Andrey Markov and his Markov model (essentially state diagrams with probabilities of state change on each arc). Markov models are awfully complex even for the simplest systems...
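Two of the simplest pieces of this maths fit in a few lines, as long as you accept assumptions the speaker was warning about. The sketch below uses the standard binomial formula for "at least k of n units alive" (assuming independent, identical servers) and the steady state of a two-state up/down Markov model with constant failure and repair rates. `k_of_n`, `steady_state_availability` and all the numbers are hypothetical.

```python
from math import comb


def k_of_n(k, n, r):
    """Probability that at least k of n independent units work,
    each unit having reliability r."""
    return sum(comb(n, i) * r**i * (1 - r) ** (n - i)
               for i in range(k, n + 1))


def steady_state_availability(failure_rate, repair_rate):
    """Long-run fraction of time spent in the 'up' state of a two-state
    (up/down) Markov model with constant rates."""
    return repair_rate / (failure_rate + repair_rate)


# 9 servers at 99% each: how much depends on how many you really need.
for needed in (9, 8, 7):
    print(f"need {needed} of 9: {k_of_n(needed, 9, 0.99):.6f}")

# One failure per 1000 hours on average, two hours to repair on average.
print(f"availability: {steady_state_availability(1 / 1000, 1 / 2):.6f}")
```

Once load balancing makes the survivors more likely to fail, the independence assumption inside `k_of_n` no longer holds and the real numbers are worse, which is why the full Markov model for even a small system grows so quickly.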

Limitations of RE with regards to software systems:

- Intractable math: only a few systems have closed-form distributions like the Gaussian; more often they have exponential (ex: a single server), log-normal (ex: multiplicative failures) or Weibull (ex: hardware) distributions. There are also the "repair" distributions, which could be modeled with a Poisson distribution. But in the end, which distributions do we apply to software systems? None: software fails based on load, not on time (as opposed to typical engineering disciplines)

- Curse of Dimensionality (you should have seen the Markov model for the simple system)

- Mean Time Between Failure (MTBF) is Bullshit! Note: See Google analysis a few years back on hard drive failures. Would be good to get this data for other devices (servers)
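As a rough illustration of the Markov approach and of why it blows up, here is a small sketch under my own assumptions (it is not the model shown in the talk). It treats a single component as a two-state chain (UP or DOWN) with hypothetical per-step failure and repair probabilities, then counts how many states you would need if every component could independently be up or down.

```python
def steady_state_up(p_fail: float, p_repair: float, iterations: int = 10_000) -> float:
    """Long-run probability of being UP in a two-state Markov chain.

    p_fail:   probability of going UP -> DOWN in one step (hypothetical value)
    p_repair: probability of going DOWN -> UP in one step (hypothetical value)
    """
    up, down = 1.0, 0.0  # start in the UP state
    for _ in range(iterations):
        # One step of the chain: redistribute probability along each arc.
        up, down = (
            up * (1 - p_fail) + down * p_repair,
            up * p_fail + down * (1 - p_repair),
        )
    return up


def state_count(components: int) -> int:
    """Number of states when each component is either UP or DOWN."""
    return 2**components


print(steady_state_up(p_fail=0.01, p_repair=0.2))
for n in (1, 5, 10, 20):
    print(n, "components ->", state_count(n), "states")
```

For the two-state case the iteration converges to p_repair / (p_fail + p_repair). The state count doubles with every component added, which is the curse of dimensionality in a nutshell.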

Other big killers:

- human error (50-65% of all outages!)

- interiority

- distributed failure modes

- Lack of independence between nodes and layers

So in the end, should we abandon Reliability Engineering for software systems?

NO! Even if RE cannot tell you when your system is OK, it can tell you when it is not. Modeling reduces uncertainty. Use RE like any other model and apply it when your system is at risk.

Reference back to the ambivalent presentation title "Reliability Engineering Matters ... Except When It Doesn't".

Thursday's Plan

Here is today's plan following the keynote. Don't hesitate to give your feedback or start a discussion in the comments.

Myself:

- Software Quality - You Know it When You See It - link

- Lean Startup: Why It Rocks Far More Than Agile Development - link

- Experiences with Architecture Governance of Large Industrial Software - link

- The DevOps Approach to Performance - link

- Dealing With Performance Challenges: Optimized Serialization Techniques - link

Steve 2.0:

Impressions of the conference so far...

Ok, day 1 of the conference is done. Now, what can be said about the experience up until now? Well, for one, I think QCon SF is awesome. The organization of such an event can be difficult as there are many people, many speakers, lots of coordination and a bunch of areas where slip-ups can occur but Trifork and InfoQ have got everything under control. The venue layout minimizes walking distance between presentations and the hotel staff is very efficient and courteous, unlike the cable car operators. So, organization-wise, I have no complaints; We are in very good hands.

On the (slightly) down side, as with any such event, no matter how well you pre-plan which topics you will cover, you will eventually end up in a presentation that just sucks. Well… maybe the word is a little strong but let's instead say that some of the presentation content was not in line with the expected return value inferred from the provided abstracts :) This is when having a pre-determined backup presentation plan becomes very handy.

On the brighter side, the organizers have conjured up a very innovative way to pick up feedback after each presentation. They post people at each exit holding one or two iPods with a simple and very intuitive user interface to provide feedback. The screen is divided into three zones with a smiley :( , :| or :) associated with red, yellow and green; I'll let you guess which is which. Simply brilliant! Like it? Just touch the happy green smiley. Hate it? Touch the red frowny one.

[Image: Presentation Rating System]

Now, what are two things I take away from this first day at the QCon SF conference?

- To my surprise, I think I have created a blogging monster. Let's call him "Steve 2.0". Coming here, we had a choice of documentation mechanisms to bring back information to the mothership: produce a standard (i.e. boring, static, non-interactive) conference report or use a blogging platform that would allow us to take notes and publish content as the day went on. Option B was taken. I'm only hoping that "Steve 2.0" is not a transient state and that he will transpose his newly learned skills to another platform (you know which one…) after all this is over.

- Mike Lee is an awesome presenter. Going through a somewhat tiresome day of active listening, idea exchanging and intensive note taking was well worth the trouble for this guy's keynote. If you do not know who Mike Lee is, here is a little background information. His keynote on Product Engineering was done wearing a mariachi suit which fitted this guy's personality and on-stage presence very well.

I wish I could show you the video of it but, as a consolation prize, I will link you to his presentation slides. [Update: Here's a video on InfoQ of the same presentation given at the Strange Loop conference.]

[Image: Mike Lee on Product Engineering]

So, one down and two more to go. We will be posting the expected attendance schedule for day 2 very soon, so keep reading and don't forget to comment on our posts. We'll definitely take the time to answer any questions you may have.

Wednesday, November 16, 2011

Stratos - an Open Source Cloud Platform

Presentation given by WSO2, the producer of the Stratos cloud-related products. Here are some pointers from their presentation. I think this should interest all our people working on SOA implementation.

- Presentation of Platform as a Service (PaaS)

- online data doubles every 15 months and the number of apps kind of follows the same trend

- what the Cloud is really depends on who you are (ex: online music for teenagers, emails for your mom, prospects for a sales guy, etc.)
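To put the "doubles every 15 months" claim in perspective, here is a quick back-of-the-envelope sketch. It simply assumes smooth exponential growth at that doubling period; the function name is mine and nothing else here comes from the presentation.

```python
def growth_factor(months: float, doubling_period_months: float = 15.0) -> float:
    """How many times larger the data set is after `months`,
    ASSUMING it doubles every `doubling_period_months`."""
    return 2 ** (months / doubling_period_months)


for label, months in (("1 year", 12), ("15 months", 15), ("5 years", 60)):
    print(f"{label:>9}: x{growth_factor(months):.2f}")
```

Under that assumption, data grows by roughly 74% a year and sixteenfold in five years.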

Slide 2:

- PaaS (Platform as a Service) is what is positioned between IaaS (Infrastructure as a Service, ex: Amazon) and SaaS (Software as a Service, ex: Google Apps)

- Stratos tries to insert itself in between the hardware and the software platforms.

Slide 3:

What does Cloud native mean:

- Distributed/Dynamically wired (i.e. it works properly in the cloud)

- Elastic (i.e. it uses the cloud efficiently)

- Multi-tenant (i.e. it only costs when you use it)

- Self-service (i.e. you put it in the hands of users)

- Granularly billed (i.e. you pay for just what you use)

- Incrementally deployed and tested (i.e. it supports seamless live upgrades - Continuous update, SxS, in-place testing and incremental deployment)
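To picture what "multi-tenant" and "granularly billed" could look like together, here is a tiny sketch of per-tenant usage metering. This is purely my own illustration: the `UsageMeter` class, its methods and the price are hypothetical and have nothing to do with how Stratos actually implements billing.

```python
from collections import defaultdict


class UsageMeter:
    """Hypothetical per-tenant meter: tenants share one platform,
    and each one pays only for the units it actually consumed."""

    def __init__(self, price_per_unit: float) -> None:
        self.price_per_unit = price_per_unit
        self._usage = defaultdict(float)  # tenant name -> units consumed

    def record(self, tenant: str, units: float) -> None:
        """Add consumed units (e.g. CPU-hours) to a tenant's running total."""
        if units < 0:
            raise ValueError("units must be non-negative")
        self._usage[tenant] += units

    def invoice(self, tenant: str) -> float:
        """Amount owed by a tenant; zero if it never used anything."""
        return self._usage.get(tenant, 0.0) * self.price_per_unit


meter = UsageMeter(price_per_unit=0.5)
meter.record("tenant-a", 10)
meter.record("tenant-a", 2)
print(meter.invoice("tenant-a"))  # tenant-a used 12 units
print(meter.invoice("tenant-b"))  # tenant-b used nothing
```

The point is only that an idle tenant costs nothing, while the underlying platform stays shared.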

Slide 4:

Apply those concepts to an Enterprise architecture… Cloud is not only about Web apps, it is also about Portals, Queues and topics, DB, Registry/Repositories, Rules/CEP queries, Integrations, etc.

Slide 5:

What are the various dimensions to evaluate a PaaS:

- Which languages and APIs does it support? (Are you locked in?)

- Can it run on a private cloud? (Are you locked in?)

- Which services does it offer? (Are you locked in?)

- Is it open source? (Are you locked in?)

Slide 6:

What are the cloud players:

1- Those without private PaaS

- Force.com / Heroku

- Google App Engine

- Amazon Elastic Beanstalk

2- Those with private PaaS

- Tibco

- Microsoft

- Cloudbees

3- Those with both

- Stratos (obviously...)

- others I missed.

Slide 7:

What is Stratos

- A product: Open source, based on OSGi

- Services (based on the product) called Stratos Live

Then we got a live demo of Stratos Live.

For more details, see http://wso2.com/cloud/stratos/

This may be interesting for App server, ESB, Repositories, etc. It seems to be a fairly complete, consistent, integrated portfolio of middleware services.

Here's a list of what they claim to offer in Stratos Live:

- Application Server as a service

- Data as a service

- Identity as a service

- Governance as a service (this one is for Pascal!)

- Business Activity Monitoring as a service

- Business Processes as a service

- Business Rules as a service

- Enterprise Service Bus (ESB) as a service

- Message Broker as a service

- etc.
