Check out Abel Avram's article "Key Takeaway Points and Lessons Learned from QCon San Francisco 2011", in which posts from this blog are quoted.

That is awesome!

QCon SF 2011

This blog will be covering my attendance at the QCon 2011 conference in San Francisco, California from November 16 to 18. QCon is a software development conference on many useful topics which I will try my best to summarize through my posts. Please, feel free to post your comments and get some conversation started. Enjoy!

Monday, December 12, 2011

Friday, November 18, 2011

Final Impressions from QCon SF 2011

The past three days have been quite intense and I'm on the verge of total information overload. All that aside, the QCon San Francisco International Software Development Conference was, in my eyes, a great success. My work colleague and I came here on an information-seeking mission and I can now say with great confidence that we have achieved our goal. In fact, we might have collected enough data to sift through for the next couple of weeks, which we will then package in a form that is consumable in our work environment. The discussions and idea exchange will definitely continue for us, but at a more local level. Some of the posts on this blog have been updated with new information as it was made available, so don't be shy to re-read some of them. Also, for those who couldn't make it to QCon SF in person, don't forget to check out InfoQ.com in the coming weeks for videos of some of the presentations.

A few numbers to put things into perspective: at the time of writing, this blog has over 950 page views, mostly from North America but also, surprisingly, from South America (Argentina) and Europe (France, Germany, Austria, United Kingdom, The Netherlands, Belgium and Ireland). I'm guessing the postings on Facebook, Twitter, LinkedIn and Google+ have helped a little :) These numbers aren't that impressive by Internet standards, but they are a personal record for the little blogging that I do, #win!

On that note, we have to proceed with a quick extraction from the premises as our flight back home leaves in just a couple of hours. See you back at the Mothership in Montreal!

[Image: The Mothership!]

DevOps to NoOps - cloud APIs (MuleiON)

Presentation content changed from "Integrating SaaS applications with Mule iON" to "DevOps to NoOps".

The speaker went through the explosion of APIs, the most popular ones, the ones he thinks we should be considering for cloud applications, and how MuleiON can be used to properly decouple an application from all the cool cloud APIs presented.

Agenda

- impact of APIs

- top 10 you should be looking at

- integrating with APIs

The impact of APIs

The ProgrammableWeb site tracks API proliferation (doubling or tripling every year!)

APIs are more and more seen as revenue streams

Architecture change

The traditional 3-tier architecture (client, server, data) is being decomposed: 3rd party applications can now use middle-tier APIs, and the data tier has changed from a typical DB into SaaS, web services, social media APIs, etc. The data no longer lives in one place but is distributed in the cloud.

Technology shift

Traditional Application Environments: Application/Web-App server/DB/OS

New Application Environments: Application/PaaS (in place of Web/App server)/IaaS (in place of DB and OS, ex: Amazon EC2)

Top 10 APIs by usage

- Google Maps

- YouTube

- Flickr

- Amazon eCommerce

- Twilio - Telephony service

- eBay

- Last.fm - Radio service

- Google search

Top 10 cloud APIs - choices from speaker

- Twilio: Telephony/SMS as a service - build text/sms/voice apps really easily (excellent API, takes seconds)

- DropBox: File Sharing as a service - share files between all your devices/friends/coworkers

- PubNub: Publish/Subscribe Messaging as a service - real-time, global messaging for cloud and mobile apps (about as real-time as the web can get, with a latency of about 55ms over the web).

- Katasoft: Application Security as a service (now in Private BETA) - user account management, authentication and access control in your application

- AirBrake: Error Management as a service - interesting approach to error management, no need to hit the logs (can be connected to JIRA for example)

- MongoHQ: MongoDB as a service - create MongoDB in seconds

- SendGrid: email as a service - way better than normal email (like gmail) and no need to run an email server

- loggly: logging as a service - manage logs and enables easy search and navigation

- Amazon S3: file system as a service - simple API for storing both large and small files

- xeround (the cloud database): MySQL as a service - direct replacement to MySQL

see slides for all the alternatives/contenders as well.

The API challenge

Not all APIs are equal

- Hard to work with different APIs (different approaches, security schemes, data formats, interpretations of REST)

- lots of custom code in your app.

iPaaS: Do not clutter your app

Use MuleiON (integration PaaS) to loosely couple your app to this cool stuff

Your killer app --> integration layer (ex: MuleiON) --> cool stuff (Twitter, etc., everything listed above)

Benefits

- Configuration approach to APIs

- Handled for you (security, session management, streaming, http callbacks)

- consume and invoke multiple services, retries, error handling

Essentially, it decouples your application from the APIs and keeps your application code clean
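To make the decoupling idea concrete, here is a minimal Python sketch of an integration layer sitting between an app and a cloud API. It is not MuleiON's API: the `IntegrationLayer` class, the `flaky_sms` connector and the retry settings are all hypothetical names invented for illustration. It only shows the general pattern of registering connectors by name and letting the layer handle retries.

```python
import time
from typing import Callable, Dict


class IntegrationLayer:
    """Hypothetical facade between an app and third-party cloud APIs."""

    def __init__(self, max_retries: int = 3, delay_seconds: float = 0.0) -> None:
        self.max_retries = max_retries
        self.delay_seconds = delay_seconds
        self._connectors: Dict[str, Callable[[dict], dict]] = {}

    def register(self, name: str, connector: Callable[[dict], dict]) -> None:
        """Configure a connector once; the app only ever refers to its name."""
        self._connectors[name] = connector

    def invoke(self, name: str, payload: dict) -> dict:
        """Call a connector, retrying when the connection fails."""
        last_error = None
        for _attempt in range(self.max_retries):
            try:
                return self._connectors[name](payload)
            except ConnectionError as error:
                last_error = error
                time.sleep(self.delay_seconds)
        raise RuntimeError(f"{name} failed after {self.max_retries} attempts") from last_error


# Stand-in connector (an assumption): fails once, then succeeds, like a flaky API.
calls = {"count": 0}


def flaky_sms(payload: dict) -> dict:
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("temporary network failure")
    return {"status": "sent", "to": payload["to"]}


layer = IntegrationLayer()
layer.register("sms", flaky_sms)
result = layer.invoke("sms", {"to": "+15550100", "body": "hello"})
print(result)
```

The application code only calls `layer.invoke("sms", ...)`; swapping the telephony provider or changing the retry policy would not touch it.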

DEMO

NetApp Case Study in Big Data

2 speakers: an Enterprise Architect from NetApp and a Principal Architect from Think Big Analytics

Big Data is not really a problem of particular interest for CAE; however, the approach taken to tackle NetApp's Big Data problem might be. They use a lot of Open Source systems to tackle their issues.

Presentation slides: not published

Agenda


- NetApp big data challenge

- Technology assessment

- Drivers

AutoSupport Family

- catch issues before they become critical

- secure automated call-home service

- system monitoring and non intrusive alerting

- RMA request without customer action

- enables faster incident management

AutoSupport - Why does it matter?

see slide

Business challenges

Gateways

- Scale out and respect their SLAs

- 600K ASUPs per week

- 40% coming in over the weekend

- 5% growth per week

ETL

- data parsed and loaded in 15 min.

- only 5% goes into the data warehouse, but even then, it is growing by 6-8TB per month

- Oracle DBMS struggling

- no easy access to this unstructured data

reporting

- numerous data mining requests currently not met

Incoming AutoSupport Volumes and TB consumption

- Exponential flat file storage requirement

The Petri Dish

- NetApp has its own BigData challenges with AutoSupport Data

- Executive guidance was to choose the solution that solved our business problem first, independent of our own technologies

- NetApp product teams very interested in our decision making process (the in-house customer petri dish)

ASUP Next - Proof of concept (POC) strategy

- don't swallow the elephant whole

- Use technical POCs to validate solution components

- Review the POCs results and determine the end state solution

- size the implementation costs using the results of the POCs

- Make vendor decisions based on facts!

Requirements Used for POC and RFP

- Cost effective

- Highly scalable

- Adaptive

- New Analytical capabilities

Technology Assessment

Essentially, they followed the following steps to validate their solutions

- Defined POC Use cases

- Did a Solution Comparison based on their technology survey

- They prototyped

- they benchmarked

AutoSupport: Hadoop Use case in POC

With a single 10-node Hadoop cluster (on E-Series with 60 disks of 2TB), they brought a 24 billion record query that took 4 weeks to load down to a 10.5 hour load time, and a previously impossible 240 billion record query now runs in only 18 hours!

This is why they chose Hadoop!
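As a quick sanity check on those figures, the arithmetic can be sketched in Python. This assumes both numbers are end-to-end run times over the stated record counts; the throughput values are derived here and were not given in the talk.

```python
HOURS_PER_WEEK = 7 * 24

old_load_hours = 4 * HOURS_PER_WEEK  # 4 weeks = 672 hours
new_load_hours = 10.5
speedup = old_load_hours / new_load_hours


def records_per_second(records: float, hours: float) -> float:
    """Average throughput over the whole run."""
    return records / (hours * 3600)


small_rate = records_per_second(24e9, new_load_hours)
large_rate = records_per_second(240e9, 18)

print(f"speedup: {speedup:.0f}x")                      # speedup: 64x
print(f"24B run: {small_rate:,.0f} records/s")         # 24B run: 634,921 records/s
print(f"240B run: {large_rate:,.0f} records/s")        # 240B run: 3,703,704 records/s
```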

Solution Architecture

multiple slides on architectures, requirements, considerations.

Physical Hadoop Architecture

see slide for all details on disks, networks, machines, RAM, etc.

All their machines run Red Hat Enterprise Linux (RHEL) 5.6, using the ext3 filesystem

Hadoop Architecture components

- Flume: a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data. Its main goal is to deliver data from applications to Hadoop’s HDFS.

- HDFS: Hadoop Distributed File System, is the primary storage system used by Hadoop applications.

- HBase: the Hadoop database to be used when you need random, realtime read/write access to your Big Data.

- MapReduce: a software framework introduced by Google in 2004 to support distributed computing on large data sets on clusters of computers.

- Pig: from Apache, it is a platform for analyzing large data sets that consists of a high-level language for expressing data analysis programs, coupled with infrastructure for evaluating these programs.
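For readers new to the MapReduce model mentioned above, here is a tiny single-process Python sketch of its three steps (map, shuffle, reduce). It does not use Hadoop at all, and the log lines and the severity-counting job are made up for illustration; a real job would run the same phases distributed across the cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Emit (key, 1) for the severity field of one log line."""
    severity = line.split()[0]
    yield severity, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Combine all values emitted for one key."""
    return key, sum(values)


# Hypothetical input; real ASUP logs look nothing like this.
log_lines = [
    "ERROR disk shelf offline",
    "INFO weekly log uploaded",
    "ERROR fan failure",
    "WARN volume nearly full",
]

mapped = [pair for line in log_lines for pair in map_phase(line)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```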

Ingestion data flows

3 types of ASUPs (AutoSUPport)

- Actionable

- Weekly logs

- Performance data

10 flume servers for 200GB/hour

less than 1 minute for case creation

Performance benchmarks

see slides

DAS vs E-Series performance benchmark

E-series multi LUN configuration changes improved read-write I/O by 65%

Hadoop Benefits

- Scalability - by decoupling computing from the storage

- Improved Performance - improved response time to meet their SLAs (faster than Oracle DBMS on unstructured data and faster than traditional server-based storage)

- Enterprise Robustness - enterprise-grade protection of HDFS metadata

Takeaways

- NetApp assessed multiple traditional DB technologies to solve its Big Data problem and determined Hadoop was the best fit.

- HW & SW short and long term costs were key in the decision to move to Hadoop on ESeries

HTML 5 Design and Development Tooling

Changed from "Rearchitecting amazon.com for the Cloud" - had enough Amazon sales speech last time...

Adobe Edge is a new web motion and interaction design tool that allows designers to bring animated content to websites, using web standards like HTML 5, CSS 3, etc.

Adobe submitted its CSS Shaders proposal to the W3C (see here)

Kind of amazing how easy it was to develop animated web content applications and to deliver them to multiple devices!

Agenda

- Edge - Motion and Interaction Design Tools

- Webkit contributions - CSS Regions, CSS Shaders

- JQuery Mobile

- PhoneGap

- Flash News

- Live Demo - quite a powerful tool (produces JavaScript)

Webkit contributions

- allows effects on any elements of the DOM

- Live demo with Chromium (which implemented this spec) using CSS Shaders studio

- warp and fold effects are kind of cool

- works directly on actual HTML content (videos, animations, selections all still work in the animated pages) - example given with an untouched Google Maps site embedded in some animations/effects

- really cool and easy to use to add artistic elements to standard web content

- shaders not yet available - pending standard publication

JQuery mobile + PhoneGap

- can run as an app or within the browser

- it creates a native shell with a web view which loads the web content or application

- used to publish to Android, iOS, etc.

- demo showing how to package web content from a browser for iOS by using the Xcode PhoneGap extensions

- Xcode = Apple's IDE for iOS applications

- saw an iPad simulator showing how the app would look on an actual iPad

- then an iPhone simulator was only a click away

- the generated application is deployable to the App Store, for example

- he then tested it on an actual device instead of on a simulator

- very easy to test applications for various platforms/devices

- he then tried it on an Android phone (with an Android app produced from Eclipse instead of Xcode)

2 problems with what was demoed so far

- multiple IDEs/tools for multiple target platforms (Xcode for iOS, Eclipse for Android, Windows, etc.)

- debugging remote HTML applications when they run on a device

For the latter problem, they developed the Web Inspector Remote tool (also called weinre), similar to web application debuggers such as the ones in Chrome, Firebug, etc.

Flash news

- Adobe is painted as a non-HTML company... which they claim is not the case

- They think Flash and Flex are still useful in certain cases, like real-time data streaming and collaboration sessions between multiple users/devices (peer-to-peer applications, for example a game with 2 players, or enterprise applications)

Conclusion

Building an ecosystem for hackers

The session originally titled "DVCS for agile teams" was cancelled and replaced by "Building an ecosystem for hackers", given by a speaker from Atlassian

The software life-cycle

It goes from ideas, to prototype, to code, to shipping the product and rinse and repeat until your product is bloated and the code begins to stink!

Conventional wisdom

- Users are stupid

- Software is hard

- Supporting software sucks

- We want simple

How can we empower users

- Build opinionated software (if you do not like it, don't buy it - but you are thus building a fence around your product)

- Build a platform they can hack on (you allow your customers to get involved)

Two schools of thoughts

- Product #1: customers are sand-boxed in the environment

- Product #2: customers build against APIs (REST)

Who does #1 well

- Apple

- Android

- Atlassian

- Chrome/Firefox

- WordPress

- Many others - you know it is well done when companies can start living within the ecosystem

How do they do this at Atlassian

- you start with a plugin framework (they adopted an OSGi based plugin system)

- Speakeasy is a framework for creating JavaScript/CSS extensions and attaching them to Atlassian products (ex: JIRA, etc.) - it runs a little Git server inside, which lets you see the sources of extensions, fork them, publish updates, etc.
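The core idea of a plugin framework can be sketched in a few lines of Python. This is neither OSGi nor Speakeasy: the `plugin` decorator, the `issue.render` extension point and both example plugins are hypothetical, and the sketch only shows how a host product can expose named extension points that outside code hooks into.

```python
from typing import Callable, Dict, List

# Hypothetical registry: extension point name -> list of plugin callbacks.
_extension_points: Dict[str, List[Callable[[dict], dict]]] = {}


def plugin(extension_point: str) -> Callable:
    """Decorator that registers a function at a named extension point."""
    def register(func: Callable[[dict], dict]) -> Callable[[dict], dict]:
        _extension_points.setdefault(extension_point, []).append(func)
        return func
    return register


def run_extension_point(extension_point: str, context: dict) -> dict:
    """Let every registered plugin transform the context in turn."""
    for func in _extension_points.get(extension_point, []):
        context = func(context)
    return context


@plugin("issue.render")
def add_priority_badge(issue: dict) -> dict:
    return {**issue, "badge": "!" if issue["priority"] == "high" else ""}


@plugin("issue.render")
def uppercase_title(issue: dict) -> dict:
    return {**issue, "title": issue["title"].upper()}


rendered = run_extension_point("issue.render", {"title": "fix login", "priority": "high"})
print(rendered)
```

The host never imports the plugins by name; it only runs whatever has been registered, which is what lets customers extend the product without touching its code.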

Demo

- Using JIRA - he used a little "kick ass" extension permitting to kill bugs in the agile view.

Pimp it with REST

- Many REST APIs built in Atlassian

- They are built as extensions with the extensions framework, using Jersey (Java REST library), which gives a WADL (Web Application Description Language) file.

- The provided SDK tools offer, among other things, a command line interface to automate all that is needed.

I have built my plugin, now what

They provide the Plugin Exchange platform to exchange plugins (soon, it will support selling plugins like the App Store)

Hey, we have got a community!

- Atlassian uses contests to get developers to start using the extension mechanisms it has developed

- They also host code contests, once a year, to encourage people to develop new plugins (ex: JIRA Hero)

- They host conferences for developers (ex: AtlasCamp) - an online community is good, but face-to-face events are better!

- The fun element is required for the ecosystem to actually live.

In the end

People want to contribute to your product, but you need to give them the ways to do it!

Automating (almost) everything using Git, Gerrit, Hudson and Mylyn

A presentation on how good open source tools can be integrated together to push development efficiency up.

First, here are the references for all the tools involved

Git (Distributed Version Control System-DVCS tool)

Gerrit (Code Review tool)

Mylyn (Eclipse-based Application Lifecycle Management-ALM tool)

Why GIT?

- DVCS

- Open Source

- Airplane-friendly (all commits can be done offline since in DVCS, commits are done locally - a push is required to put it on remote servers)

- Push and Pull (inter-developer direct exchange, no need to pass via central server)

- Branching and Merging (amazing for this aspect - automatic merging was required because of the many Linux kernel contributors) - based on the principle: if it hurts, do it more often!

Why Hudkins?

- CI

- Open Source

- Large community

- Lots of plugins

Why Gerrit?

- Code reviews for GIT (line by line annotations)

- Open Source

- Lets you build a workflow into the system

- Access Control (originally created to manage Android contributions)

Why Mylyn?

- 90% of IDE's content is irrelevant... (see image) - (lack of integration, information overload, context-loss when multi tasking or when exchanging tasks with another developer)

- Open Source

- Part of Eclipse 99% of the time

- NOTES: Other IDEs supported? Seems not. Apparently, it has performance issues (Eclipse users seem to disable Mylyn right off the bat...)

Pie chart of a developer's day

See the presentation image, but essentially, a typical developer spends 34% of the day reading/writing code, 10% testing code and the rest of the day producing nothing significant (including 22% lost to pure interruption inefficiencies)

Why Mylyn part 2?

- Mylyn can track all you do within a task (which classes you changed, the web pages you accessed, etc.)

- Ex: a colleague goes on vacation; by reopening the task to complete it in their place, you get access to the context that follows the task and thus see the relevant classes, web pages, etc.

- Task focused interface (task system in the background - like JIRA, Bugzilla, etc.)

- Over 60 ALM tools supported

Contribution Workflow

See image from preso

Before: Patch based code reviews

- essentially a back and forth between contributors and integrators (the code did not compile, then a test did not pass, etc.) - typically, it used to take approximately 2 days to integrate a piece of code into one of the open source projects listed above

- Limited automation

- Difficult to trace changes

- cumbersome process

- Late feedback

- Code is pushed to Gerrit (which acts as a Git repository with code review automation)

- Code changes are then pulled by Hudson, the build is done and Hudson votes back to Gerrit depending on whether each required gate passed (build OK: vote +1, tests OK: vote +1, etc.); if a step fails, the result flows back into the developer's IDE, mentioning for example that a certain test failed. The feedback is almost instantaneous.
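The voting flow just described can be sketched as follows. This is plain Python, not the Gerrit or Hudson API: `GateResult`, `verify_change` and `is_mergeable` are hypothetical names, the gates are stubbed with lambdas, and voting -1 on a failed gate is an assumption (the talk notes only mention the +1 votes).

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class GateResult:
    name: str
    vote: int      # +1 when the gate passes, -1 when it fails (assumed)
    message: str   # feedback that would flow back to the developer


def verify_change(gates: List[Tuple[str, Callable[[], bool]]]) -> List[GateResult]:
    """Run each gate in order and stop at the first failure."""
    results: List[GateResult] = []
    for name, check in gates:
        passed = check()
        status = "OK" if passed else "FAILED"
        results.append(GateResult(name, 1 if passed else -1, f"{name} {status}"))
        if not passed:
            break  # later gates are skipped once an earlier one has failed
    return results


def is_mergeable(results: List[GateResult], required_gates: int) -> bool:
    """A change is accepted only if every required gate ran and voted +1."""
    return len(results) == required_gates and all(r.vote == 1 for r in results)


# Stubbed gates: the build passes, the tests fail.
gates = [("build", lambda: True), ("tests", lambda: False)]
results = verify_change(gates)
for r in results:
    print(f"{r.vote:+d} {r.message}")
print("mergeable:", is_mergeable(results, len(gates)))
```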

Demo: Collaboration

A complete demo was conducted to show the previously discussed tools integration. Very interesting even though it was Eclipse-based ( ;-) )

NOTE on Business Processes

It is possible to model business processes at Gerrit level. For example, all developers could have access rights to commit to Gerrit, but only a certain predefined user can commit to the central repository.

Awesome! How do we do it at home now?

Setting up the server (in that order)

- Install all the software and then:

- Clone GIT repository

- Initialize Gerrit

- Register and configure a Gerrit human user

- Register and configure a Build user (the automating user)

- Install Hudkins Git and Gerrit plugins

- Configure standard Hudkins build

- Configure Hudkins Gerrit build (integrate the review within the build)

Setting up the client (contributor - in that order)

- Install EGit, Mylyn Builds (Hudson), and Mylyn Reviews (Gerrit)

- Clone GIT repository (pull)

- Configure Gerrit as remote master

- There is no step 4! Done.

Setting up the client (committer - in that order)

- Configure a Mylyn builds Hudson repository

- Configure a Mylyn review Gerrit repository

- Create a task query for Gerrit

- There is no step 4! Done.

Visualizing Software Quality - Visualization Tools

This is the second part of a previous post found here. If you haven't done so yet, go read it. Now, as promised, here is a summary list of the tools which can be used to visualize various aspects of software quality. I will provide a short description for each kind of visualizations and for more detail, I strongly recommend you go visit Erik's blog at http://erik.doernenburg.com/.

For all of the following types of software quality visualization charts or graph, you must first collect and aggregate the metrics data. This can be done using static analysis tools, scripts or even Excel. What is important is defining what you want to measure, finding the best automated way to get the data (how) and aggregating the necessary measures to produce the following views. Each of the following views have different purposes however, they are all very easy to understand and give you a very simple way to see the quality of your software.

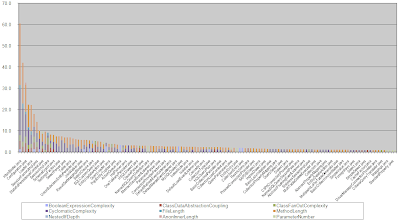

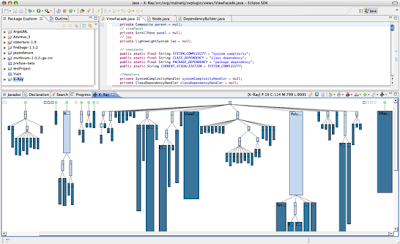

Toxicity Chart

The toxicity chart is a tool which can be used to visualize the internal quality of your software, more specifically, it can show you how truly horrible your code is. In the toxicity chart, each bar represents a class and the height of each bar is a composition of metrics showing you how toxic the class is. So, the higher the bar, the more horrible the class is. It can be an effective way to represent poor quality to less technically inclined people (e.g. management types).

An example of a metric which composes the bar could be the length of a method. To generate this measure, you must define a threshold for the specific metric which would then produce a proportional score. For example, if the line-of-code threshold for a method is 20 lines and a method contains 30 lines, its toxicity score would be 1.5 since the method is 1.5 times above the set threshold. For more details on the toxicity chart, read the following article.

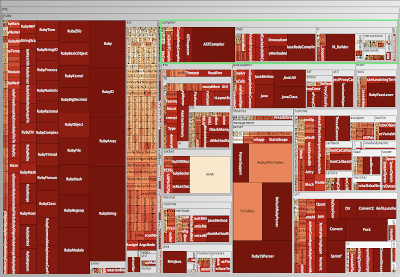

Metric Tree Maps

A metrics tree map visualizes the structure of the code by rendering the hierarchical package (namespace) structure as nested rectangles with parent packages encompassing child packages. (Excerpt taken from Erik's blog). For more details on the metric tree maps, go read the following article.

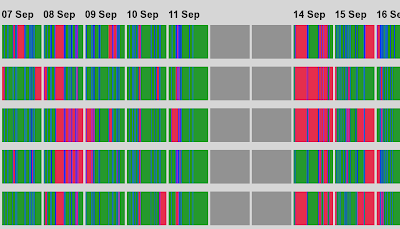

Visualizing Build Pain

The following graph shows a series of "build lines" representing the state of builds over time. Each build gets a line. This is very useful in continuous integration environments as it gives you a high level view of build related problems. Colour is used in the following way: green for good, red for broken, and blue for building. So, if you set this up on a big screen in a highly visible area of your office, people will know very quickly when many problems arise. As an added bonus, keeping the build lines from past days will give you an idea of how well the development teams have reduced the broken builds from the past. For more details on the build line graphs, go read the following article.

System Complexity View

System Complexity is a polymetric view that shows the classes of the system, organized in inheritance hierarchies (excerpt from Moose Technology). Each class is represented by a node:

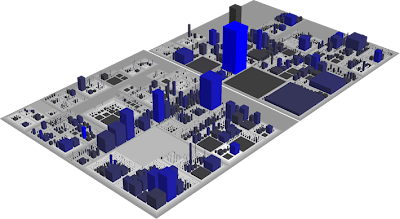

CodeCity 3D View

Finally, this type of visualization has to be the most awesome way to look at your software. CodeCity is written by Richard Wettel and is an integrated environment for software analysis, in which software systems are visualized as interactive, navigable 3D cities. The classes are represented as buildings in the city, while the packages are depicted as the districts in which the buildings reside. The visible properties of the city artifacts depict a set of chosen software metrics (excerpt from Moose Technologies). For more details on this, you can also go read the following article.

For all of the following types of software quality visualization charts or graphs, you must first collect and aggregate the metrics data. This can be done using static analysis tools, scripts or even Excel. What is important is defining what you want to measure, finding the best automated way to get the data (how) and aggregating the necessary measures to produce the following views. Each of the following views has a different purpose; however, they are all very easy to understand and give you a very simple way to see the quality of your software.

Toxicity Chart

The toxicity chart is a tool which can be used to visualize the internal quality of your software, more specifically, it can show you how truly horrible your code is. In the toxicity chart, each bar represents a class and the height of each bar is a composition of metrics showing you how toxic the class is. So, the higher the bar, the more horrible the class is. It can be an effective way to represent poor quality to less technically inclined people (e.g. management types).

[Image: Toxicity Chart]

An example of a metric which composes the bar could be the length of a method. To generate this measure, you must define a threshold for the specific metric which would then produce a proportional score. For example, if the line-of-code threshold for a method is 20 lines and a method contains 30 lines, its toxicity score would be 1.5 since the method is 1.5 times above the set threshold. For more details on the toxicity chart, read the following article.
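The scoring rule from the example above can be sketched in Python. Only the 20-line method length threshold comes from the text; the `parameter_count` threshold, the rule that values within the threshold score 0, and summing the scores into one bar height are assumptions made for this sketch.

```python
from typing import Dict

# Hypothetical thresholds; only the 20-line method length comes from the text.
THRESHOLDS: Dict[str, float] = {"method_length": 20, "parameter_count": 5}


def metric_score(value: float, threshold: float) -> float:
    """Score a metric proportionally to its threshold; 0 when within limits (assumed)."""
    return value / threshold if value > threshold else 0.0


def class_toxicity(metrics: Dict[str, float]) -> float:
    """Height of one bar: the sum of the scores of all measured metrics."""
    return sum(metric_score(value, THRESHOLDS[name]) for name, value in metrics.items())


print(metric_score(30, 20))  # 1.5
print(class_toxicity({"method_length": 30, "parameter_count": 4}))  # 1.5
```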

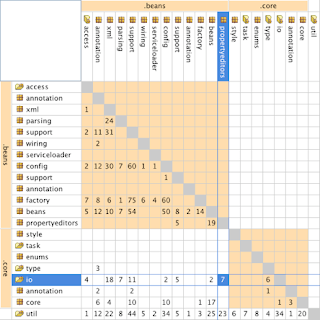

Dependency Structure Matrix

The following matrix is used to understand structure by showing dependencies between groups of classes such as packages (in Java) or namespaces (in C#). The resulting table can be used to uncover cyclic dependencies and level of coupling between these groups. For more details on the DSM, go read the following article.

[Image: DSM]
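Here is a minimal Python sketch of how such a matrix can be built and read. The package names and their dependencies are invented for illustration, and the cycle check only finds direct two-package cycles (cells mirrored across the diagonal); longer cycles would need a proper graph traversal.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical package dependencies: package -> packages it depends on.
dependencies: Dict[str, Set[str]] = {
    "ui": {"service", "model"},
    "service": {"model", "persistence"},
    "persistence": {"model", "service"},  # service <-> persistence cycle
    "model": set(),
}

packages: List[str] = sorted(dependencies)

# matrix[i][j] == 1 means packages[i] depends on packages[j].
matrix = [[1 if col in dependencies[row] else 0 for col in packages]
          for row in packages]


def direct_cycles(names: List[str], m: List[List[int]]) -> List[Tuple[str, str]]:
    """Find pairs of packages that depend on each other."""
    return [(names[i], names[j])
            for i in range(len(names))
            for j in range(i + 1, len(names))
            if m[i][j] and m[j][i]]


for name, row in zip(packages, matrix):
    print(f"{name:12} {row}")
print(direct_cycles(packages, matrix))
```

The number of filled cells in a row also gives a rough idea of how coupled a package is to the rest of the system.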

Metric Tree Maps

A metrics tree map visualizes the structure of the code by rendering the hierarchical package (namespace) structure as nested rectangles with parent packages encompassing child packages. (Excerpt taken from Erik's blog). For more details on the metric tree maps, go read the following article.

[Image: Metric Tree Map Polymetric View]

Visualizing Build Pain

The following graph shows a series of "build lines" representing the state of builds over time. Each build gets a line. This is very useful in continuous integration environments as it gives you a high level view of build related problems. Colour is used in the following way: green for good, red for broken, and blue for building. So, if you set this up on a big screen in a highly visible area of your office, people will know very quickly when many problems arise. As an added bonus, keeping the build lines from past days will give you an idea of how well the development teams have reduced the broken builds from the past. For more details on the build line graphs, go read the following article.

[Image: Build Lines]

System Complexity View

System Complexity is a polymetric view that shows the classes of the system, organized in inheritance hierarchies (excerpt from Moose Technology). Each class is represented by a node:

- width = the number of attributes of the class

- height = the number of methods of the class

- color = the number of lines of code of the class

[Image: System Complexity Polymetric View]

CodeCity 3D View

Finally, this type of visualization has to be the most awesome way to look at your software. CodeCity is written by Richard Wettel and is an integrated environment for software analysis, in which software systems are visualized as interactive, navigable 3D cities. The classes are represented as buildings in the city, while the packages are depicted as the districts in which the buildings reside. The visible properties of the city artifacts depict a set of chosen software metrics (excerpt from Moose Technologies). For more details on this, you can also go read the following article.

[Image: CodeCity Polymetric View]

Lesson Learned from Netflix in the Global Cloud [UPDATED]

Simply put, if you want to do Cloud, don't try to build one; find the best possible infrastructure web services platform in the cloud out there and use it! I'll try to give you a glimpse of what Adrian Cockcroft from Netflix presented this morning but omit the technical details, as I will post the presentation link later in an updated version of this post.


If you don't know about Netflix, you might have been living under a rock for the past couple of years, but just in case: Netflix provides services for watching TV and movies online to over 20 million users globally across multiple platforms, most of which can instantly stream the content over the Internet. When Netflix decided to move its operation to the cloud, it decided to use a public Cloud. Why use the public Cloud, you ask? Well... Netflix just couldn't build data centres fast enough and decided to search for a robust and scalable Cloud offering to develop its platform on. The choice fell on Amazon Web Services (AWS). Partnering with AWS has allowed Netflix to be ~100% Cloud based! Some smaller back end bits have still not been moved to the Cloud; however, they are aggressively pushing to change that. The simple rationale behind it all was that AWS has dedicated infrastructure support teams which Netflix did not, so the move was a no-brainer.

[Image: Netflix in the Cloud on AWS]

So, is your company thinking of being in the Cloud or has it started trying to build one? Only time will tell which path will #win; however, know that if you want to make the move now, AWS is readily available to anyone. A lot of the content from this presentation goes into the technical details of their platform architecture on AWS and I invite you to come back to this post later today for a direct link to the presentation as it becomes available. [UPDATED: Slides Link]

In the meantime, check out The Netflix "Tech" Blog for some discussion on the subject.

Dart, Structured Web Programming by Google

Gilad Bracha, software engineer at Google, is presenting an overview of Dart, a new programming language for structured web programming. Code example links are posted at the end.


[Image: Gilad Bracha on Dart]

Dart is a technology preview, so it is open to change and will definitely change. On today's Web, developing large scale applications is hard. It is hard to find the program structure, there is a lack of static types, no support for libraries, tool support is weak and startup performance is bad. Innovation is essential. Dart tries to fill a vacuum; the competition is NOT JavaScript… but fragmented mobile platforms are. So, what is Dart?

- A simple and unsurprising OO programming language

- Class-based single inheritance with interfaces

- Has optional static types

- Has real lexical scoping

- It is single-threaded

- Has a familiar syntax

You can try Dart online at: http://try.dartlang.org. Dart has a different type checker. In other programming languages, a conventional type-checker is a lobotomized theorem prover, i.e. it tries to prove the program obeys a type system. If it can't construct a proof, the program is considered invalid: "Guilty until proven innocent". In Dart, you are "Innocent until proven guilty".

In Dart, types are optional (more on optional types). During development, one can choose to validate types but by default, type annotations have no effect and no cost… the code runs free!
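As a rough analogy only (this is Python, not Dart, and the two languages differ in many ways), Python's type hints behave in a similar "innocent until proven guilty" fashion: annotations are not enforced at run time, and validating them is an optional extra step done by a separate tool such as mypy. The `double` function below is a made-up example.

```python
def double(value: int) -> int:
    """Annotated as int -> int, but the annotation is not checked at run time."""
    return value * 2


print(double(21))    # 42
print(double("ab"))  # abab: contradicts the annotation, yet runs without error
```

A static checker would flag the second call, but the interpreter itself runs the code unchanged whether or not the annotations are present.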

There are no threads in Dart. The world has suffered enough with threads. Dart has isolates. They are actor-like units of concurrency and security. Each isolate is conceptually a process, nothing is shared and all communication takes place via message passing. Isolates support concurrent execution.

[Image: Dart Execution]

On Dart performance, when translated to JavaScript, it should be at parity with handwritten JavaScript code. On the VM, it should be substantially better.

In conclusion, Dart is not done yet. Every feature of every programming language out there has been proposed on the Dart mailing list; however, Google has not made any decision on these yet. By all means, go check out the preview, try it out and participate!

Some code examples

- Hello World - http://try.dartlang.org/s/bk8g

- Generics - http://try.dartlang.org/s/dyQh

- Isolates - http://try.dartlang.org/s/cckM

You can learn more about Dart at http://www.dartlang.org/
